### Overview

This PR introduces the following changes:

1. Adds a unified mechanism to convert ResponseItem into EventMsg.
2. Ensures that when a session is initialized with initial history, a vector of EventMsg is sent along with the session configuration. This allows clients to re-render the UI accordingly.
3. Adds integration tests.

### Caveats

This implementation does not send every EventMsg that was previously dispatched to clients. The excluded events fall into two categories:

- “Arguably” rolled-out events: Examples include tool calls and apply-patch calls. While these events are conceptually rolled out, we currently only roll out ResponseItems. These events are already handled elsewhere and transformed into EventMsg before being sent.
- Non-rolled-out events: Certain events, such as TurnDiff, Error, and TokenCount, are not rolled out at all.

### Future Directions

At present, resuming a session involves maintaining two states:

- UI state: Clients can replay most of the important UI from the provided EventMsg history.
- Model state: The model receives the complete session history to reconstruct its internal state.

This design provides a solid foundation. If more precise UI reconstruction is needed in the future, we have two potential paths:

1. Introduce a third data structure from which both ResponseItems and EventMsgs can be derived.
2. Clearly divide responsibilities: the core system ensures the integrity of the model state, while clients are responsible for reconstructing the UI.

OpenAI Codex CLI

npm i -g @openai/codex

or brew install codex

Codex CLI is a coding agent from OpenAI that runs locally on your computer.

If you are looking for the cloud-based agent from OpenAI, Codex Web, see chatgpt.com/codex.

Quickstart

Installing and running Codex CLI

Install globally with your preferred package manager. If you use npm:

npm install -g @openai/codex

Alternatively, if you use Homebrew:

brew install codex

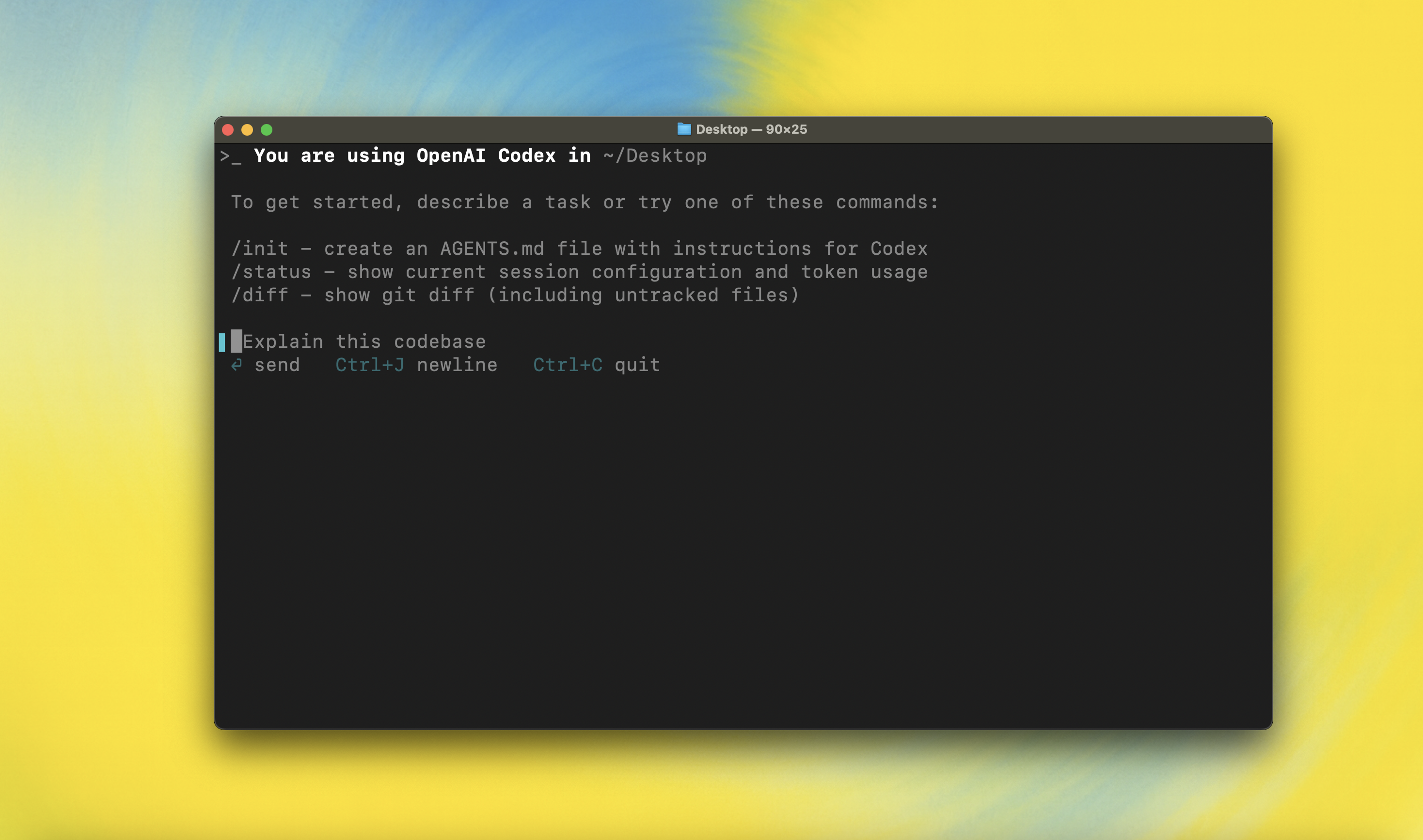

Then simply run codex to get started:

codex

You can also go to the latest GitHub Release and download the appropriate binary for your platform.

Each GitHub Release contains many executables, but in practice, you likely want one of these:

- macOS
  - Apple Silicon/arm64: codex-aarch64-apple-darwin.tar.gz
  - x86_64 (older Mac hardware): codex-x86_64-apple-darwin.tar.gz
- Linux
  - x86_64: codex-x86_64-unknown-linux-musl.tar.gz
  - arm64: codex-aarch64-unknown-linux-musl.tar.gz

Each archive contains a single entry with the platform baked into the name (e.g., codex-x86_64-unknown-linux-musl), so you likely want to rename it to codex after extracting it.
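The extract-and-rename step can be sketched as a small shell function. This is only an illustration: the helper name `install_codex_archive` and the destination directory in the usage note are assumptions, not part of Codex, and the archive is assumed to have been downloaded already.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with Codex): unpack a release archive and
# rename its single platform-named entry to plain `codex`.
# Usage: install_codex_archive <archive.tar.gz> <destination-dir>
install_codex_archive() {
    archive="$1"
    dest="$2"
    # The entry inside the archive carries the platform in its name, e.g.
    # codex-x86_64-unknown-linux-musl; derive it from the archive file name.
    entry="$(basename "$archive" .tar.gz)"
    mkdir -p "$dest" || return 1
    tar -xzf "$archive" -C "$dest" || return 1
    mv "$dest/$entry" "$dest/codex" || return 1
    chmod +x "$dest/codex"
}
```

For example, `install_codex_archive codex-x86_64-unknown-linux-musl.tar.gz "$HOME/.local/bin"` would leave an executable named `codex` in that directory, which then needs to be on your `PATH`.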

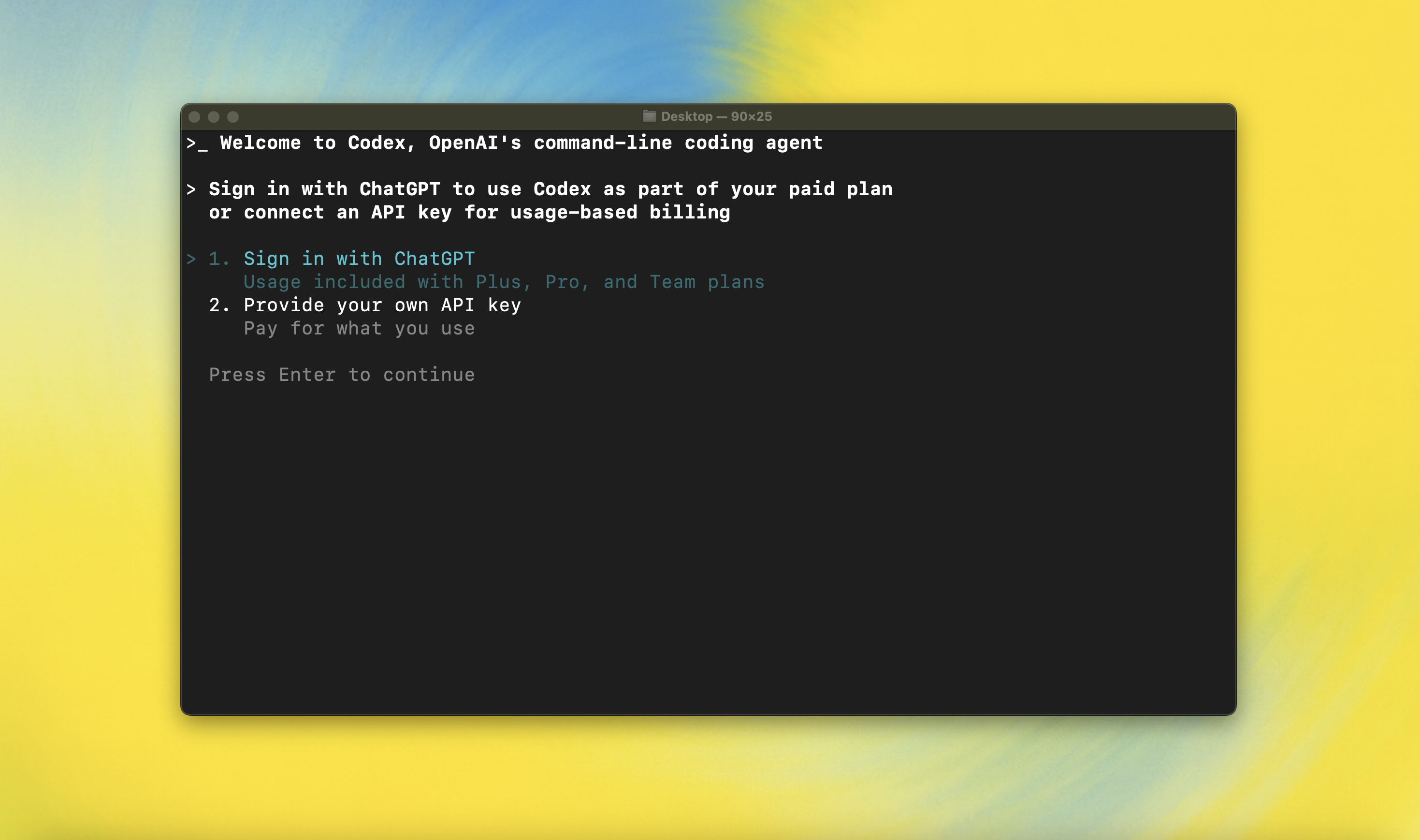

Using Codex with your ChatGPT plan

Run codex and select Sign in with ChatGPT. We recommend signing into your ChatGPT account to use Codex as part of your Plus, Pro, Team, Edu, or Enterprise plan. Learn more about what's included in your ChatGPT plan.

You can also use Codex with an API key, but this requires additional setup. If you previously used an API key for usage-based billing, see the migration steps. If you're having trouble with login, please comment on this issue.

Model Context Protocol (MCP)

Codex CLI supports MCP servers. Enable them by adding an mcp_servers section to your ~/.codex/config.toml.
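As a rough sketch of what adding such a section could look like from the shell: the helper name `add_example_mcp_server`, the server name `example-server`, and the `example-mcp-server` package are placeholders, and the `command` and `args` keys are assumed to follow the layout in the Codex configuration docs, which remain the authoritative reference for the schema.

```shell
#!/bin/sh
# Hypothetical helper: append a placeholder mcp_servers entry to a Codex
# config file. Server name, command, and package are illustrative only.
# Usage: add_example_mcp_server <path-to-config.toml>
add_example_mcp_server() {
    config="$1"
    mkdir -p "$(dirname "$config")" || return 1
    # Do nothing if the table already exists; TOML rejects duplicate tables.
    if [ -f "$config" ] && grep -q '^\[mcp_servers\.example-server\]' "$config"; then
        return 0
    fi
    cat >> "$config" <<'EOF'

[mcp_servers.example-server]
command = "npx"
args = ["-y", "example-mcp-server"]
EOF
}
```

Calling `add_example_mcp_server "$HOME/.codex/config.toml"` appends the entry once; running it again leaves the file unchanged.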

Configuration

Codex CLI supports a rich set of configuration options, with preferences stored in ~/.codex/config.toml. For full configuration options, see Configuration.

Docs & FAQ

- Getting started

- Sandbox & approvals

- Authentication

- Advanced

- Zero data retention (ZDR)

- Contributing

- Install & build

- FAQ

- Open source fund

License

This repository is licensed under the Apache-2.0 License.