POC code

```rust
use tokio::sync::mpsc;
use std::time::Duration;

#[tokio::main]
async fn main() {
    println!("=== Test 1: Simulating original MCP server pattern ===");
    test_original_pattern().await;
}

async fn test_original_pattern() {
    println!("Testing the original pattern from MCP server...");

    // Create channel - this simulates the original incoming_tx/incoming_rx
    let (tx, mut rx) = mpsc::channel::<String>(10);

    // Task 1: Simulates stdin reader that will naturally terminate
    let stdin_task = tokio::spawn({
        let tx_clone = tx.clone();
        async move {
            println!(" stdin_task: Started, will send 3 messages then exit");
            for i in 0..3 {
                let msg = format!("Message {}", i);
                if tx_clone.send(msg.clone()).await.is_err() {
                    println!(" stdin_task: Receiver dropped, exiting");
                    break;
                }
                println!(" stdin_task: Sent {}", msg);
                tokio::time::sleep(Duration::from_millis(300)).await;
            }
            println!(" stdin_task: Finished (simulating EOF)");
            // tx_clone is dropped here
        }
    });

    // Task 2: Simulates message processor
    let processor_task = tokio::spawn(async move {
        println!(" processor_task: Started, waiting for messages");
        while let Some(msg) = rx.recv().await {
            println!(" processor_task: Processing {}", msg);
            tokio::time::sleep(Duration::from_millis(100)).await;
        }
        println!(" processor_task: Finished (channel closed)");
    });

    // Task 3: Simulates stdout writer or other background task
    let background_task = tokio::spawn(async move {
        for i in 0..2 {
            tokio::time::sleep(Duration::from_millis(500)).await;
            println!(" background_task: Tick {}", i);
        }
        println!(" background_task: Finished");
    });

    println!(" main: Original tx is still alive here");
    println!(" main: About to call tokio::join! - will this deadlock?");

    // This is the pattern from the original code
    let _ = tokio::join!(stdin_task, processor_task, background_task);
}
```
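The POC above keeps the original `tx` alive while waiting on the tasks, so the receiver never observes a closed channel. As a minimal sketch of the underlying rule (a channel closes only once every sender has been dropped), the example below reproduces the same producer/consumer shape and drops the original sender before waiting. It is an illustration, not the change made in this PR: it uses `std::sync::mpsc` and threads instead of tokio so it runs without external crates, and the function name `run_with_sender_dropped` is hypothetical.

```rust
use std::sync::mpsc;
use std::thread;

/// Runs a producer/consumer pair and returns what the consumer processed.
/// Hypothetical helper for illustration; not part of the original code.
fn run_with_sender_dropped() -> Vec<String> {
    let (tx, rx) = mpsc::channel::<String>();

    // Producer: owns a clone of the sender, like stdin_task in the POC.
    let producer = thread::spawn({
        let tx_clone = tx.clone();
        move || {
            for i in 0..3 {
                if tx_clone.send(format!("Message {}", i)).is_err() {
                    break; // receiver is gone
                }
            }
            // tx_clone is dropped here
        }
    });

    // Consumer: iterates until every sender is gone, like processor_task.
    let consumer = thread::spawn(move || rx.into_iter().collect::<Vec<String>>());

    // Drop the original sender before waiting. Without this line the
    // consumer would block forever, because one sender would still exist.
    drop(tx);

    producer.join().expect("producer panicked");
    consumer.join().expect("consumer panicked")
}

fn main() {
    let processed = run_with_sender_dropped();
    println!("processed {} messages", processed.len());
}
```

In the tokio version the same idea would apply: release the original `tx` (for example with `drop(tx)`) before the `tokio::join!` call, so that `rx.recv()` can return `None` once `stdin_task` finishes.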

---------

Co-authored-by: Michael Bolin <bolinfest@gmail.com>

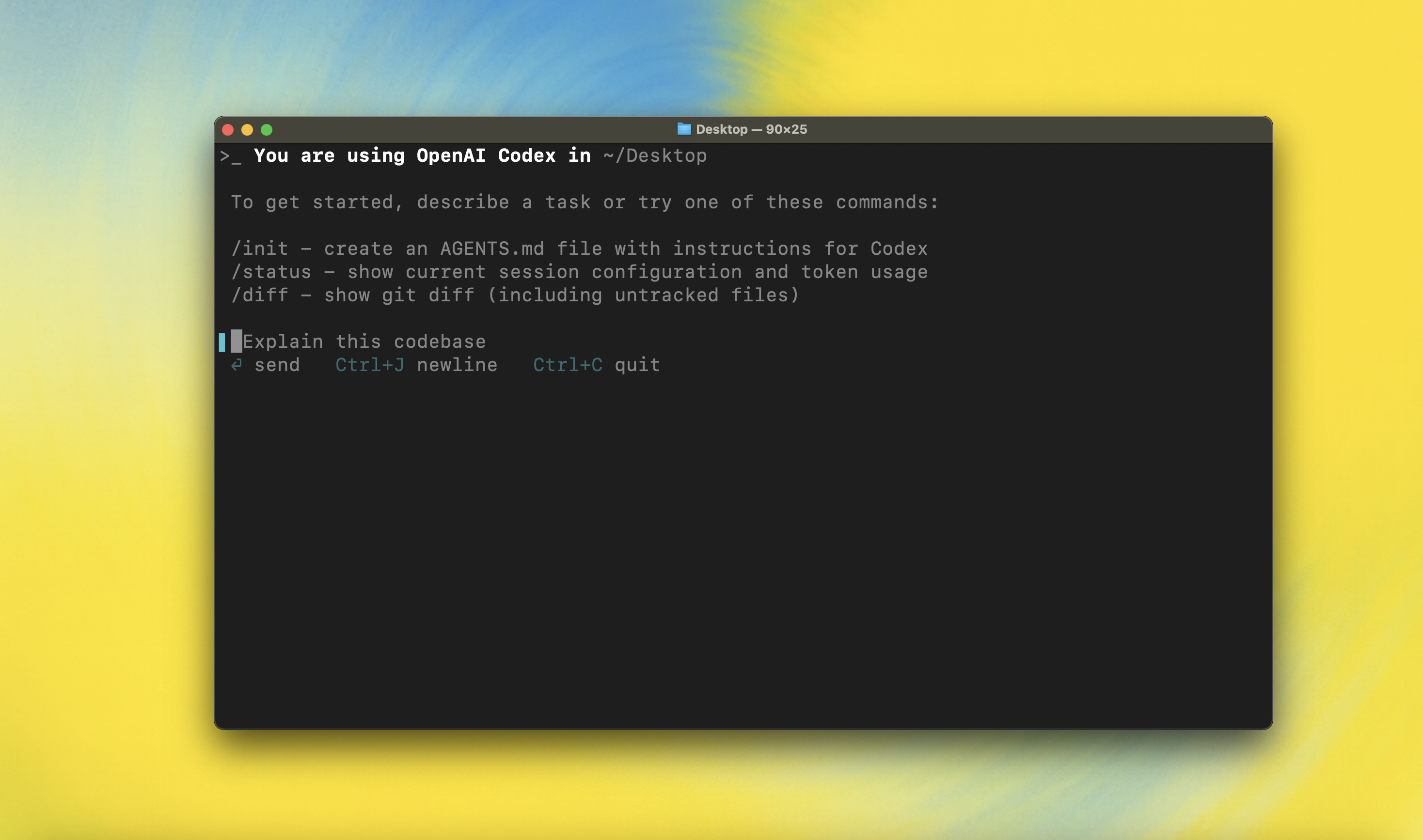

OpenAI Codex CLI

`npm i -g @openai/codex` or `brew install codex`

Codex CLI is a coding agent from OpenAI that runs locally on your computer.

If you are looking for the cloud-based agent from OpenAI, Codex Web, see chatgpt.com/codex.

Quickstart

Installing and running Codex CLI

Install globally with your preferred package manager:

npm install -g @openai/codex # Alternatively: `brew install codex`

Then simply run codex to get started:

codex

You can also go to the latest GitHub Release and download the appropriate binary for your platform.

Each GitHub Release contains many executables, but in practice, you likely want one of these:

- macOS
  - Apple Silicon/arm64: codex-aarch64-apple-darwin.tar.gz
  - x86_64 (older Mac hardware): codex-x86_64-apple-darwin.tar.gz
- Linux
  - x86_64: codex-x86_64-unknown-linux-musl.tar.gz
  - arm64: codex-aarch64-unknown-linux-musl.tar.gz

Each archive contains a single entry with the platform baked into the name (e.g., codex-x86_64-unknown-linux-musl), so you likely want to rename it to codex after extracting it.

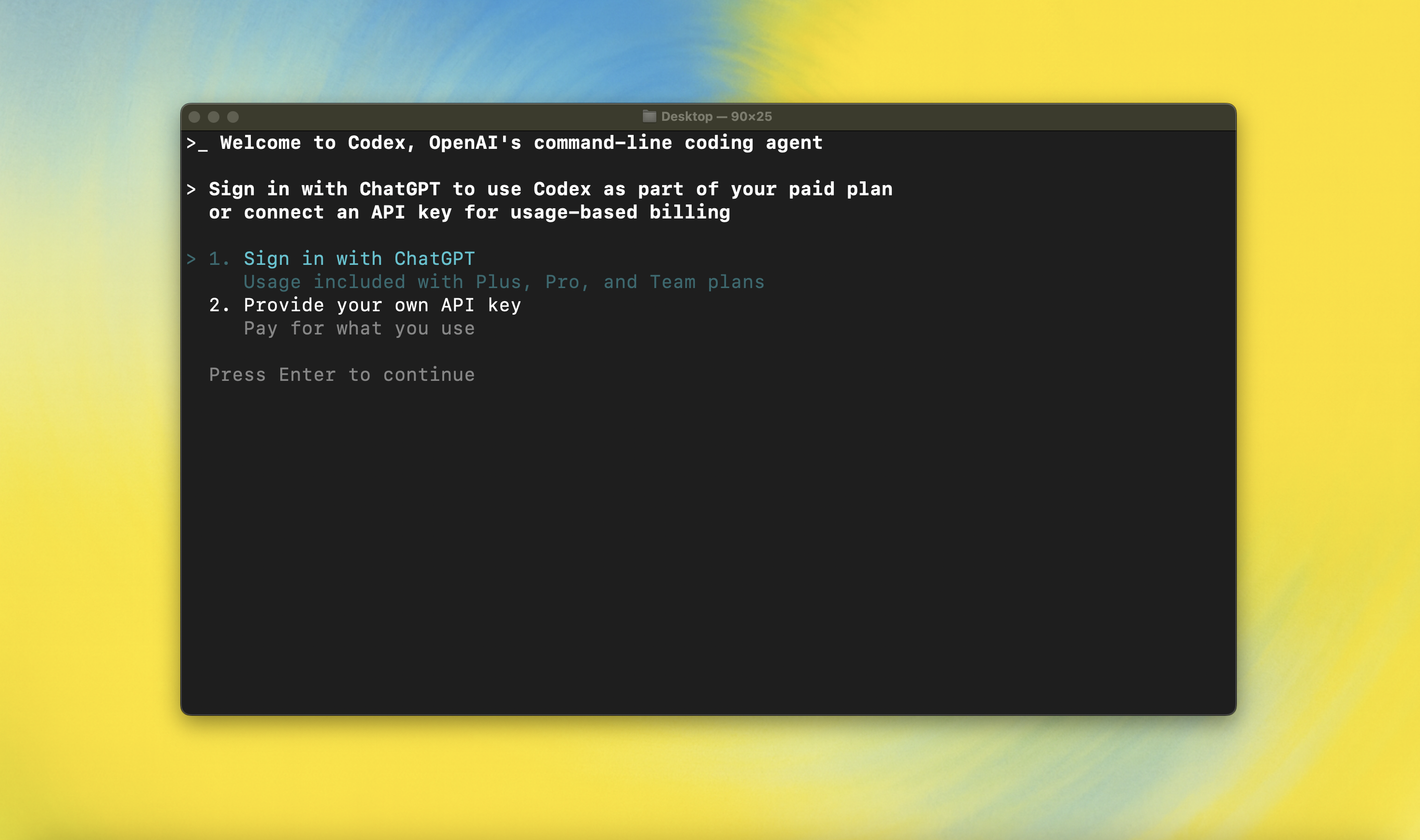

Using Codex with your ChatGPT plan

Run codex and select Sign in with ChatGPT. We recommend signing into your ChatGPT account to use Codex as part of your Plus, Pro, Team, Edu, or Enterprise plan. Learn more about what's included in your ChatGPT plan.

You can also use Codex with an API key, but this requires additional setup. If you previously used an API key for usage-based billing, see the migration steps. If you're having trouble with login, please comment on this issue.

Model Context Protocol (MCP)

Codex CLI supports MCP servers. To enable them, add an mcp_servers section to your ~/.codex/config.toml.

Configuration

Codex CLI supports a rich set of configuration options, with preferences stored in ~/.codex/config.toml. For full configuration options, see Configuration.

Docs & FAQ

- Getting started

- Sandbox & approvals

- Authentication

- Advanced

- Zero data retention (ZDR)

- Contributing

- Install & build

- FAQ

- Open source fund

License

This repository is licensed under the Apache-2.0 License.