**TypeScript and JSON schema exports**

While working on Thread/Turn/Items type definitions, I realized we will run into name conflicts between the v1 and v2 APIs. For example, `RateLimitWindow` can't be reused: v1 uses the `RateLimitWindow` from `protocol/`, which serializes as snake_case, but we want to expose camelCase everywhere, so we'll define a V2 version of that struct that serializes as camelCase.

To set us up for a clean and isolated v2 API, generate types into a `v2/` namespace for both TypeScript and JSON schema.

- TypeScript: v2 types emit under `out_dir/v2/*.ts`, and the root index.ts now re-exports them via `export * as v2 from "./v2";`.
- JSON Schemas: v2 definitions bundle under `#/definitions/v2/*` rather than the root.

The location of the original types (v1 and types pulled from `protocol/` and other core crates) hasn't changed; they are still at the root. This is for backwards compatibility: no breaking changes to existing usages of v1 APIs and types.

**Notifications**

While working on export.rs, I:

- refactored server/client notifications with macros (like we already do for methods) so they also get exported (I noticed they weren't being exported at all).
- removed the hardcoded list of types to export as JSON schema by leveraging the existing macros instead.
- took a stab at API V2 notifications. These aren't wired up yet, and I expect to iterate on these this week.
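To make the `v2/` split concrete, here is a rough sketch of how a consumer might see the two `RateLimitWindow` shapes side by side, and how a `#/definitions/v2/*` reference would resolve in a bundled schema. The field names (`used_percent`, `window_minutes`), the `toV2` and `resolveRef` helpers, and the `bundle` object are all hypothetical and only illustrate the layout; they are not the generated output. The `declare namespace v2` block stands in for the real `export * as v2 from "./v2"` re-export so the example fits in one file.

```typescript
// Root-level (v1) shape: snake_case, as serialized by the existing types.
// Field names are hypothetical, chosen only to show the casing difference.
interface RateLimitWindow {
  used_percent: number;
  window_minutes: number | null;
}

// Stand-in for the generated v2 module. In the real output these types
// would live under out_dir/v2/*.ts and be reached as `v2.RateLimitWindow`.
declare namespace v2 {
  export interface RateLimitWindow {
    usedPercent: number;
    windowMinutes: number | null;
  }
}

// Hypothetical helper: map the v1 shape onto the v2 shape.
function toV2(w: RateLimitWindow): v2.RateLimitWindow {
  return { usedPercent: w.used_percent, windowMinutes: w.window_minutes };
}

// Sketch of the bundled JSON Schema layout: v1 definitions stay at the
// root of `definitions`, v2 definitions are nested one level down.
const bundle = {
  definitions: {
    RateLimitWindow: { type: "object", required: ["used_percent"] },
    v2: {
      RateLimitWindow: { type: "object", required: ["usedPercent"] },
    },
  },
};

// Resolve a local JSON Pointer reference such as
// "#/definitions/v2/RateLimitWindow" against a schema document.
function resolveRef(root: unknown, ref: string): unknown {
  if (!ref.startsWith("#/")) {
    throw new Error(`only local refs are supported: ${ref}`);
  }
  let node: unknown = root;
  for (const raw of ref.slice(2).split("/")) {
    // Undo JSON Pointer escaping: "~1" is "/", "~0" is "~".
    const key = raw.replace(/~1/g, "/").replace(/~0/g, "~");
    if (typeof node !== "object" || node === null || !(key in node)) {
      throw new Error(`unresolved ref: ${ref}`);
    }
    node = (node as Record<string, unknown>)[key];
  }
  return node;
}

const legacy: RateLimitWindow = { used_percent: 42, window_minutes: 60 };
const modern: v2.RateLimitWindow = toV2(legacy);
```

Because both names resolve independently, existing v1 callers keep importing `RateLimitWindow` from the root unchanged, while new code opts into `v2.RateLimitWindow` explicitly.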

npm i -g @openai/codex

or brew install --cask codex

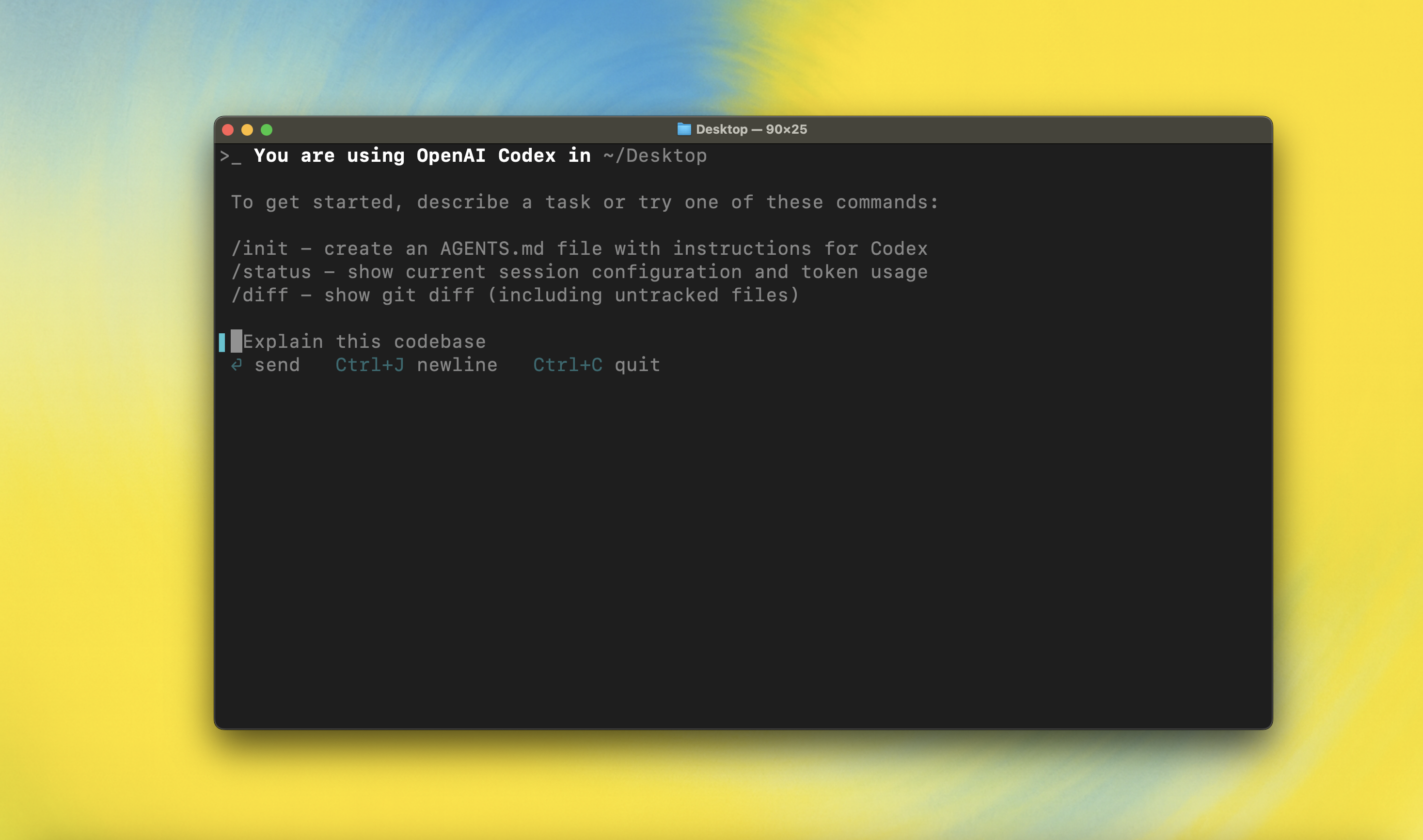

Codex CLI is a coding agent from OpenAI that runs locally on your computer.

If you want Codex in your code editor (VS Code, Cursor, Windsurf), install it in your IDE.

If you are looking for the cloud-based agent from OpenAI, Codex Web, go to chatgpt.com/codex.

Quickstart

Installing and running Codex CLI

Install globally with your preferred package manager. If you use npm:

npm install -g @openai/codex

Alternatively, if you use Homebrew:

brew install --cask codex

Then simply run codex to get started:

codex

If you're running into upgrade issues with Homebrew, see the FAQ entry on brew upgrade codex.

You can also go to the latest GitHub Release and download the appropriate binary for your platform.

Each GitHub Release contains many executables, but in practice, you likely want one of these:

- macOS

  - Apple Silicon/arm64: codex-aarch64-apple-darwin.tar.gz

  - x86_64 (older Mac hardware): codex-x86_64-apple-darwin.tar.gz

- Linux

  - x86_64: codex-x86_64-unknown-linux-musl.tar.gz

  - arm64: codex-aarch64-unknown-linux-musl.tar.gz

Each archive contains a single entry with the platform baked into the name (e.g., codex-x86_64-unknown-linux-musl), so you likely want to rename it to codex after extracting it.
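As a rough sketch of the selection and rename steps above, the snippet below maps a platform and architecture to the matching archive and builds the extract-and-rename commands. The helper names (`archiveFor`, `extractedBinaryName`, `installCommands`) are hypothetical, the `platform`/`arch` keys follow Node.js naming (`process.platform`, `process.arch`), and it assumes the entry inside each archive is the archive name without `.tar.gz`, as the example above suggests.

```typescript
// Release archive names, copied from the list above and keyed by
// Node.js-style platform and architecture strings.
const RELEASE_ARCHIVES: Record<string, Record<string, string>> = {
  darwin: {
    arm64: "codex-aarch64-apple-darwin.tar.gz",
    x64: "codex-x86_64-apple-darwin.tar.gz",
  },
  linux: {
    x64: "codex-x86_64-unknown-linux-musl.tar.gz",
    arm64: "codex-aarch64-unknown-linux-musl.tar.gz",
  },
};

// Hypothetical helper: pick the archive for a platform/arch pair,
// or undefined when the pair is not in the table above.
function archiveFor(platform: string, arch: string): string | undefined {
  return RELEASE_ARCHIVES[platform]?.[arch];
}

// Assumption: the single entry in the archive is named like the archive
// itself, minus the ".tar.gz" suffix.
function extractedBinaryName(archive: string): string {
  return archive.replace(/\.tar\.gz$/, "");
}

// Build the shell commands that extract the archive and rename the
// platform-specific binary to plain `codex`.
function installCommands(archive: string): string[] {
  return [`tar -xzf ${archive}`, `mv ${extractedBinaryName(archive)} codex`];
}
```

For instance, `installCommands(archiveFor("linux", "x64")!)` yields a `tar -xzf` command followed by `mv codex-x86_64-unknown-linux-musl codex`.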

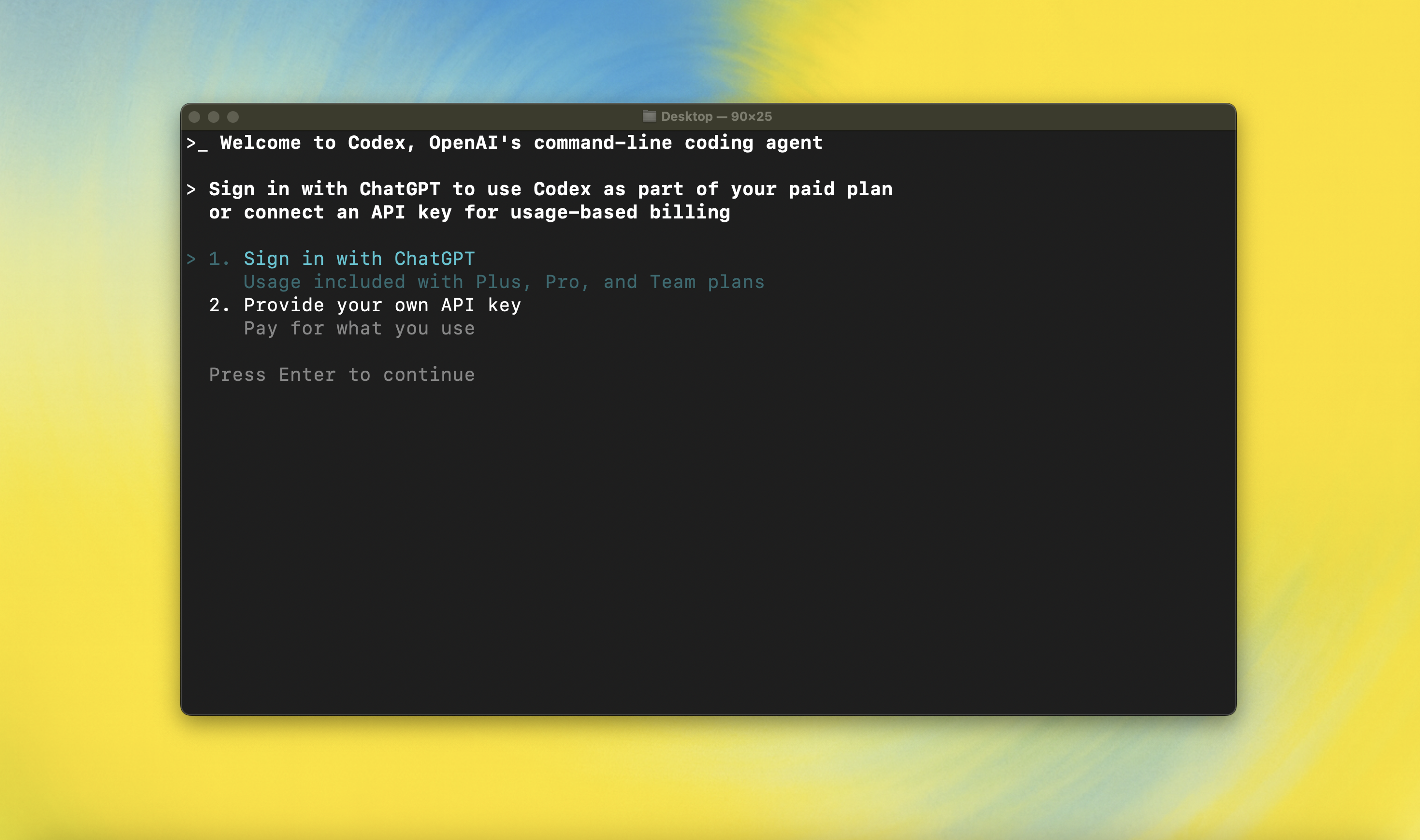

Using Codex with your ChatGPT plan

Run codex and select Sign in with ChatGPT. We recommend signing into your ChatGPT account to use Codex as part of your Plus, Pro, Team, Edu, or Enterprise plan. Learn more about what's included in your ChatGPT plan.

You can also use Codex with an API key, but this requires additional setup. If you previously used an API key for usage-based billing, see the migration steps. If you're having trouble with login, please comment on this issue.

Model Context Protocol (MCP)

Codex can access MCP servers. To configure them, refer to the config docs.

Configuration

Codex CLI supports a rich set of configuration options, with preferences stored in ~/.codex/config.toml. For full configuration options, see Configuration.

Docs & FAQ

- Getting started

- Configuration

- Sandbox & approvals

- Authentication

- Automating Codex

- Advanced

- Zero data retention (ZDR)

- Contributing

- Install & build

- FAQ

- Open source fund

License

This repository is licensed under the Apache-2.0 License.