Details are in `responses-api-proxy/README.md`, but the key contribution of this PR is a new subcommand, `codex responses-api-proxy`, which reads the auth token for use with the OpenAI Responses API from `stdin` at startup and then proxies `POST` requests to `/v1/responses` over to `https://api.openai.com/v1/responses`, injecting the auth token as part of the `Authorization` header.
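A minimal, std-only Rust sketch of the request handling described above. It is not the PR's code. The `Route` type and the `route` and `inject_auth` helpers are hypothetical names used for illustration. Several behaviors are assumptions of this sketch:

- paths are matched exactly
- every other method/path combination is rejected
- any client-supplied `Authorization` header is replaced rather than kept

The `GET /shutdown` arm mirrors the `--http-shutdown` option used later in this description.

```rust
/// Upstream endpoint named in the PR description.
const UPSTREAM_URL: &str = "https://api.openai.com/v1/responses";

/// Hypothetical classification of an incoming request.
#[derive(Debug, PartialEq)]
enum Route {
    /// Forward the request to the given upstream URL with the proxy's token.
    Forward(&'static str),
    /// Stop the server; only honored when started with `--http-shutdown`.
    Shutdown,
    /// Anything else is rejected (an assumption of this sketch).
    Reject,
}

/// Classify a request by method and exact path (hypothetical helper).
fn route(method: &str, path: &str, http_shutdown: bool) -> Route {
    match (method, path) {
        ("POST", "/v1/responses") => Route::Forward(UPSTREAM_URL),
        ("GET", "/shutdown") if http_shutdown => Route::Shutdown,
        _ => Route::Reject,
    }
}

/// Drop any client-supplied `Authorization` header and add the proxy's own.
/// HTTP header names are case-insensitive, hence `eq_ignore_ascii_case`.
fn inject_auth(headers: &mut Vec<(String, String)>, authorization: &str) {
    headers.retain(|(name, _)| !name.eq_ignore_ascii_case("authorization"));
    headers.push(("Authorization".to_string(), authorization.to_string()));
}
```

For example, `route("POST", "/v1/responses", false)` yields `Route::Forward(UPSTREAM_URL)`. By contrast, `route("GET", "/shutdown", false)` yields `Route::Reject`, because shutdown was not enabled. Replacing the header, rather than appending a second one, keeps an unprivileged client from choosing which credential reaches the upstream API.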

The expectation is that `codex responses-api-proxy` is launched by a privileged user who has access to the auth token so that it can be used by unprivileged users of the Codex CLI on the same host.

If the client machine has only one user account with `sudo`, one option is to:

- run `sudo codex responses-api-proxy --http-shutdown --server-info /tmp/server-info.json` to start the server
- record the port written to `/tmp/server-info.json`
- relinquish their `sudo` privileges (which is irreversible!) like so:
  ```shell
  sudo deluser "$USER" sudo || sudo gpasswd -d "$USER" sudo || true
  ```
- use `codex` with the proxy (see `README.md`)
- when done, make a `GET` request to the server using the `PORT` from `server-info.json` to shut it down:
  ```shell
  curl --fail --silent --show-error "http://127.0.0.1:$PORT/shutdown"
  ```

To protect the auth token, we:

- allocate a 1024-byte buffer on the stack and write `"Bearer "` into it to start
- read from `stdin`, copying the contents into the buffer after the prefix
- after verifying that the input looks good, create a `String` from that buffer (so the data is now on the heap)
- zero out the stack-allocated buffer using https://crates.io/crates/zeroize so that the zeroing is not optimized away by the compiler
- invoke `.leak()` on the `String` so we can treat its contents as a `&'static str`, as it will live for the rest of the process
- on UNIX, `mlock(2)` the memory backing the `&'static str`
- use `HeaderValue::from_static()` when building an HTTP request with the `&'static str`, to avoid copying it
- also invoke `.set_sensitive(true)` on the `HeaderValue`, which in theory indicates to other parts of the HTTP stack that the header should be treated with "special care" to avoid leakage:

439d1c50d7/src/header/value.rs (L346-L376)
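The token-handling steps above can be sketched with the standard library alone. This is a hedged approximation, not the PR's implementation. The names `read_bearer`, `fill_and_copy`, `zero_volatile`, and `invalid` are hypothetical. `zero_volatile` swaps the `zeroize` crate for volatile writes followed by a compiler fence. The "looks good" rule used here is an assumption: non-empty printable ASCII after trimming one trailing newline. The `mlock(2)` and `HeaderValue` steps need the `libc` and `http` crates, so they are omitted.

```rust
use std::io::{self, Read};
use std::sync::atomic::{compiler_fence, Ordering};

/// Size of the stack buffer, as described in the PR.
const BUFFER_LEN: usize = 1024;
/// Prefix written into the buffer before the token is read.
const PREFIX: &[u8] = b"Bearer ";

/// Overwrite `buf` with zeros. Volatile writes cannot be removed as dead
/// stores; in this sketch this stands in for the `zeroize` crate.
fn zero_volatile(buf: &mut [u8]) {
    for byte in buf.iter_mut() {
        // SAFETY: `byte` is a valid, aligned, exclusive reference to a `u8`.
        unsafe { std::ptr::write_volatile(byte, 0) };
    }
    compiler_fence(Ordering::SeqCst);
}

fn invalid(msg: &str) -> io::Error {
    io::Error::new(io::ErrorKind::InvalidData, msg)
}

/// Read the token after the prefix, validate it (assumed rules), and copy
/// the full `Authorization` value to the heap.
fn fill_and_copy(input: &mut impl Read, buf: &mut [u8; BUFFER_LEN]) -> io::Result<String> {
    let mut len = PREFIX.len();
    // Read until EOF or until the buffer is full.
    while len < BUFFER_LEN {
        match input.read(&mut buf[len..]) {
            Ok(0) => break,
            Ok(n) => len += n,
            Err(e) if e.kind() == io::ErrorKind::Interrupted => {}
            Err(e) => return Err(e),
        }
    }
    if len == BUFFER_LEN {
        return Err(invalid("token does not fit in the buffer"));
    }
    // Trim one trailing "\n" and an optional preceding "\r".
    let mut end = len;
    if end > PREFIX.len() && buf[end - 1] == b'\n' {
        end -= 1;
    }
    if end > PREFIX.len() && buf[end - 1] == b'\r' {
        end -= 1;
    }
    let token = &buf[PREFIX.len()..end];
    if token.is_empty() || !token.iter().all(u8::is_ascii_graphic) {
        return Err(invalid("token must be non-empty printable ASCII"));
    }
    // Every byte is ASCII, so this is valid UTF-8; `to_owned` copies it to the heap.
    let value = std::str::from_utf8(&buf[..end]).map_err(|_| invalid("token is not UTF-8"))?;
    Ok(value.to_owned())
}

/// Hypothetical entry point: returns "Bearer <token>" as a `&'static str`.
fn read_bearer(mut input: impl Read) -> io::Result<&'static str> {
    let mut buf = [0u8; BUFFER_LEN];
    buf[..PREFIX.len()].copy_from_slice(PREFIX);
    let result = fill_and_copy(&mut input, &mut buf);
    // Zero the stack buffer on both the success and the error path.
    zero_volatile(&mut buf);
    let value = result?;
    // Leak the heap copy so it lives for the rest of the process.
    let leaked: &'static str = value.leak();
    Ok(leaked)
}
```

Feeding `read_bearer` the bytes `b"sk-example\n"` yields `"Bearer sk-example"`. If this sketch reads from `std::io::stdin()`, note one caveat: `Stdin` is internally buffered, so a copy of the token may remain in that buffer. The sketch does not address this, and it does not show how the PR itself reads `stdin`.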

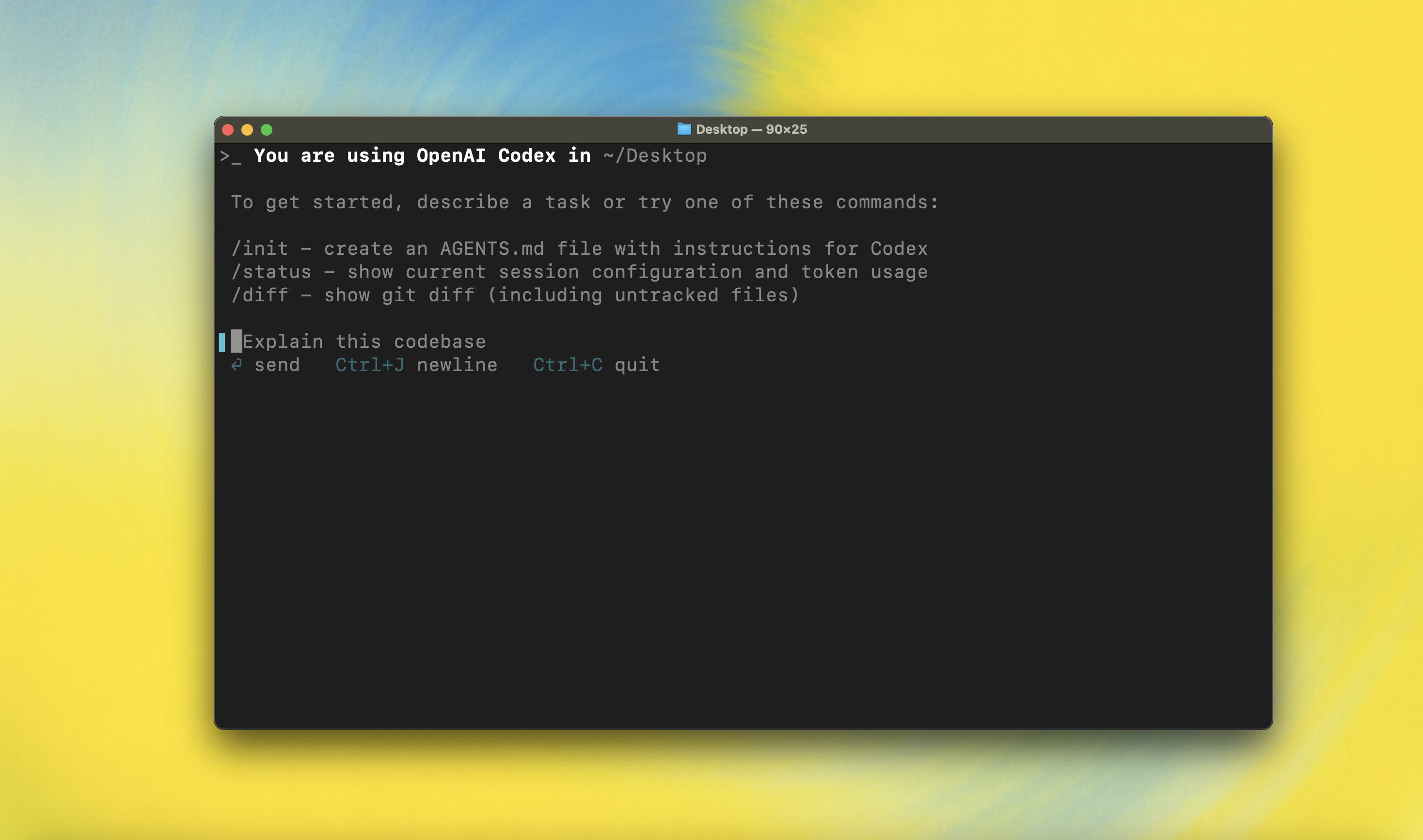

OpenAI Codex CLI

`npm i -g @openai/codex` or `brew install codex`

Codex CLI is a coding agent from OpenAI that runs locally on your computer.

If you want Codex in your code editor (VS Code, Cursor, Windsurf), install in your IDE.

If you are looking for the cloud-based agent from OpenAI, Codex Web, go to chatgpt.com/codex.

Quickstart

Installing and running Codex CLI

Install globally with your preferred package manager. If you use npm:

`npm install -g @openai/codex`

Alternatively, if you use Homebrew:

`brew install codex`

Then simply run `codex` to get started:

`codex`

You can also go to the latest GitHub Release and download the appropriate binary for your platform.

Each GitHub Release contains many executables, but in practice, you likely want one of these:

- macOS
  - Apple Silicon/arm64: `codex-aarch64-apple-darwin.tar.gz`
  - x86_64 (older Mac hardware): `codex-x86_64-apple-darwin.tar.gz`
- Linux
  - x86_64: `codex-x86_64-unknown-linux-musl.tar.gz`
  - arm64: `codex-aarch64-unknown-linux-musl.tar.gz`

Each archive contains a single entry with the platform baked into the name (e.g., `codex-x86_64-unknown-linux-musl`), so you likely want to rename it to `codex` after extracting it.

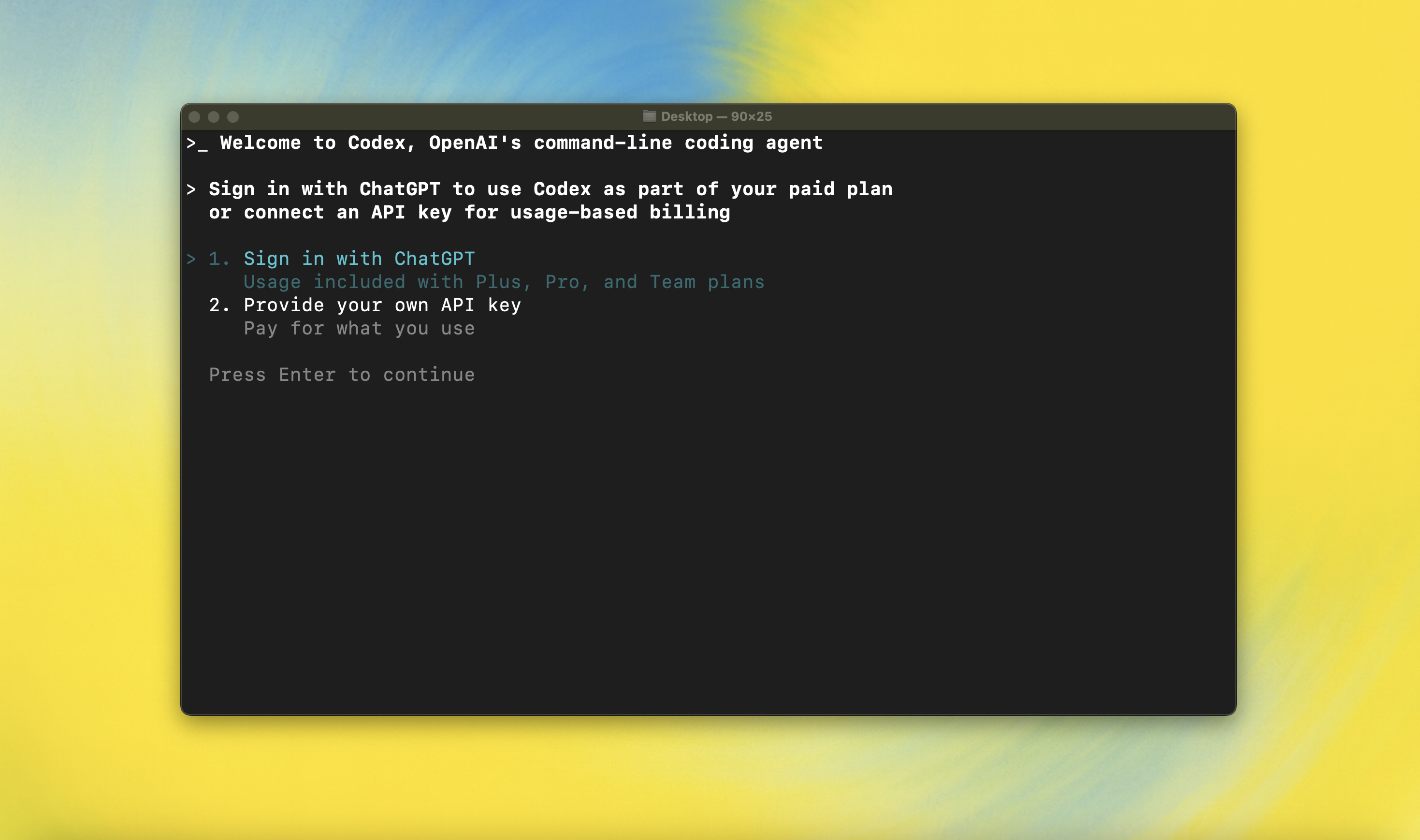

Using Codex with your ChatGPT plan

Run codex and select Sign in with ChatGPT. We recommend signing into your ChatGPT account to use Codex as part of your Plus, Pro, Team, Edu, or Enterprise plan. Learn more about what's included in your ChatGPT plan.

You can also use Codex with an API key, but this requires additional setup. If you previously used an API key for usage-based billing, see the migration steps. If you're having trouble with login, please comment on this issue.

Model Context Protocol (MCP)

Codex CLI supports MCP servers. Enable them by adding an `mcp_servers` section to your `~/.codex/config.toml`.

Configuration

Codex CLI supports a rich set of configuration options, with preferences stored in `~/.codex/config.toml`. For full configuration options, see Configuration.

Docs & FAQ

- Getting started

- Sandbox & approvals

- Authentication

- Advanced

- Zero data retention (ZDR)

- Contributing

- Install & build

- FAQ

- Open source fund

License

This repository is licensed under the Apache-2.0 License.