Last week, I thought I had found the smoking gun in our flaky integration tests, where holding these locks could have led to a deadlock:

- https://github.com/openai/codex/pull/2876
- https://github.com/openai/codex/pull/2878

Yet even after those PRs went in, we continued to see flakiness in our integration tests! With the additional logging added while debugging those tests, though, I now saw things like:

```
read message from stdout: Notification(JSONRPCNotification { jsonrpc: "2.0", method: "codex/event/exec_approval_request", params: Some(Object {"id": String("0"), "msg": Object {"type": String("exec_approval_request"), "call_id": String("call1"), "command": Array [String("python3"), String("-c"), String("print(42)")], "cwd": String("/tmp/.tmpFj2zwi/workdir")}, "conversationId": String("c67b32c5-9475-41bf-8680-f4b4834ebcc6")}) })
notification: Notification(JSONRPCNotification { jsonrpc: "2.0", method: "codex/event/exec_approval_request", params: Some(Object {"id": String("0"), "msg": Object {"type": String("exec_approval_request"), "call_id": String("call1"), "command": Array [String("python3"), String("-c"), String("print(42)")], "cwd": String("/tmp/.tmpFj2zwi/workdir")}, "conversationId": String("c67b32c5-9475-41bf-8680-f4b4834ebcc6")}) })
read message from stdout: Request(JSONRPCRequest { id: Integer(0), jsonrpc: "2.0", method: "execCommandApproval", params: Some(Object {"conversation_id": String("c67b32c5-9475-41bf-8680-f4b4834ebcc6"), "call_id": String("call1"), "command": Array [String("python3"), String("-c"), String("print(42)")], "cwd": String("/tmp/.tmpFj2zwi/workdir")}) })
writing message to stdin: Response(JSONRPCResponse { id: Integer(0), jsonrpc: "2.0", result: Object {"decision": String("approved")} })
in read_stream_until_notification_message(codex/event/task_complete)
[mcp stderr] 2025-09-04T00:00:59.738585Z INFO codex_mcp_server::message_processor: <- response: JSONRPCResponse { id: Integer(0), jsonrpc: "2.0", result: Object {"decision": String("approved")} }
[mcp stderr] 2025-09-04T00:00:59.738740Z DEBUG codex_core::codex: Submission sub=Submission { id: "1", op: ExecApproval { id: "0", decision: Approved } }
[mcp stderr] 2025-09-04T00:00:59.738832Z WARN codex_core::codex: No pending approval found for sub_id: 0
```

That is, a response was sent for a request, but no callback was in place to handle the response!

This time, I think I may have found the underlying issue (though the fixes for holding locks for too long may have also been part of it): I found cases where we were sending the request:

234c0a0469/codex-rs/core/src/codex.rs (L597)

before inserting the `Sender` into the `pending_approvals` map (which has to wait on acquiring a mutex):

234c0a0469/codex-rs/core/src/codex.rs (L598-L601)

so it is possible the request could go out and the client could respond before `pending_approvals` was updated!

Note this was happening in both `request_command_approval()` and `request_patch_approval()`, which matches the sorts of errors we have been seeing when these integration tests have been flaking on us.

While here, I am also adding some extra logging that prints when inserting into `pending_approvals` overwrites an existing entry rather than adding a new one. Today, a conversation can have only one pending request at a time, but as we are planning to support parallel tool calls, this invariant may not continue to hold, in which case we need to revisit this abstraction.
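To make the ordering concrete, here is a minimal sketch of the "register the callback, then send the request" pattern, including the overwrite logging. It is not the actual code in `codex.rs`: the `Session` and `Decision` types, the `request_approval()` and `notify_approval()` helpers, and the `send_request` closure are hypothetical stand-ins, and it uses the standard library's `std::sync::mpsc` channel, which may differ from the channel type the real code uses. Only `pending_approvals` and the idea of storing a `Sender` come from the description above.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{self, Receiver, Sender};
use std::sync::Mutex;

/// Hypothetical stand-in for the decision carried by an approval response.
#[allow(dead_code)]
#[derive(Debug, Clone, Copy, PartialEq)]
enum Decision {
    Approved,
    Denied,
}

/// Hypothetical stand-in for the state that tracks pending approvals.
#[derive(Default)]
struct Session {
    pending_approvals: Mutex<HashMap<String, Sender<Decision>>>,
}

impl Session {
    /// Registers the `Sender` in `pending_approvals` first, and only then
    /// sends the request, so a fast client cannot respond before the map
    /// has been updated.
    fn request_approval(&self, sub_id: &str, send_request: impl FnOnce()) -> Receiver<Decision> {
        let (tx, rx) = mpsc::channel();

        // Scope the lock so it is released before the request goes out.
        let prev = {
            let mut pending = self.pending_approvals.lock().unwrap();
            pending.insert(sub_id.to_string(), tx)
        };

        // `HashMap::insert` returns the previous value, if any, which makes
        // an overwrite easy to detect and log.
        if prev.is_some() {
            eprintln!("Overwriting existing pending approval for sub_id: {}", sub_id);
        }

        // Safe to send now: the callback is already in place.
        send_request();
        rx
    }

    /// Delivers a client's decision to whoever is waiting on `sub_id`.
    /// Returns `false` if no pending approval was registered.
    fn notify_approval(&self, sub_id: &str, decision: Decision) -> bool {
        let tx = self.pending_approvals.lock().unwrap().remove(sub_id);
        match tx {
            Some(tx) => tx.send(decision).is_ok(),
            None => {
                eprintln!("No pending approval found for sub_id: {}", sub_id);
                false
            }
        }
    }
}
```

To exercise the race window, pass a `send_request` closure that calls `notify_approval()` immediately, simulating a client that responds as soon as the request is sent. With the insert placed before the send, the decision is delivered; with the two steps swapped, the same closure would hit the "No pending approval found" branch.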

OpenAI Codex CLI

npm i -g @openai/codex

or brew install codex

Codex CLI is a coding agent from OpenAI that runs locally on your computer.

If you are looking for the cloud-based agent from OpenAI, Codex Web, see chatgpt.com/codex.

Quickstart

Installing and running Codex CLI

Install globally with your preferred package manager. If you use npm:

npm install -g @openai/codex

Alternatively, if you use Homebrew:

brew install codex

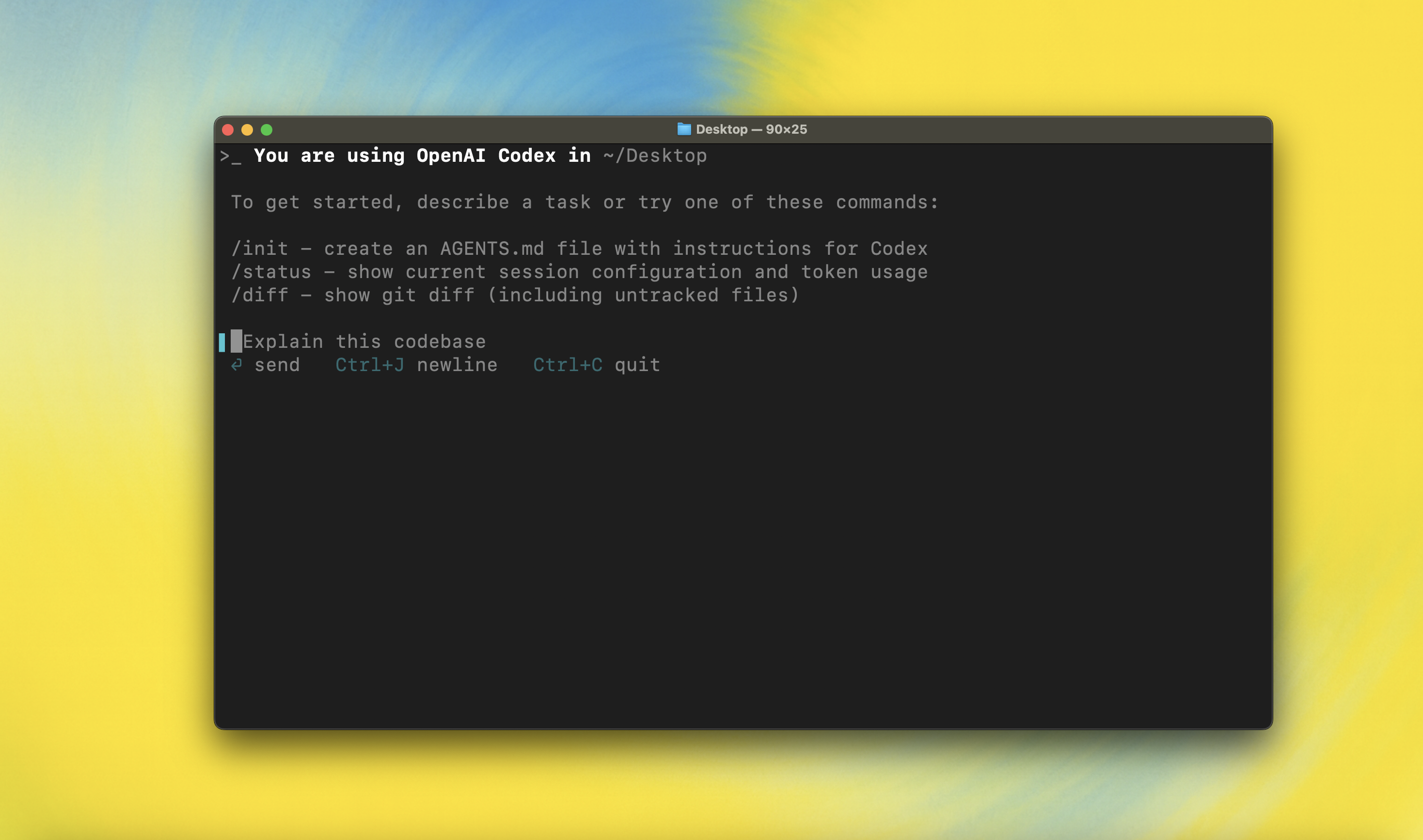

Then simply run codex to get started:

codex

You can also go to the latest GitHub Release and download the appropriate binary for your platform.

Each GitHub Release contains many executables, but in practice, you likely want one of these:

- macOS
  - Apple Silicon/arm64: codex-aarch64-apple-darwin.tar.gz
  - x86_64 (older Mac hardware): codex-x86_64-apple-darwin.tar.gz
- Linux
  - x86_64: codex-x86_64-unknown-linux-musl.tar.gz
  - arm64: codex-aarch64-unknown-linux-musl.tar.gz

Each archive contains a single entry with the platform baked into the name (e.g., codex-x86_64-unknown-linux-musl), so you likely want to rename it to codex after extracting it.

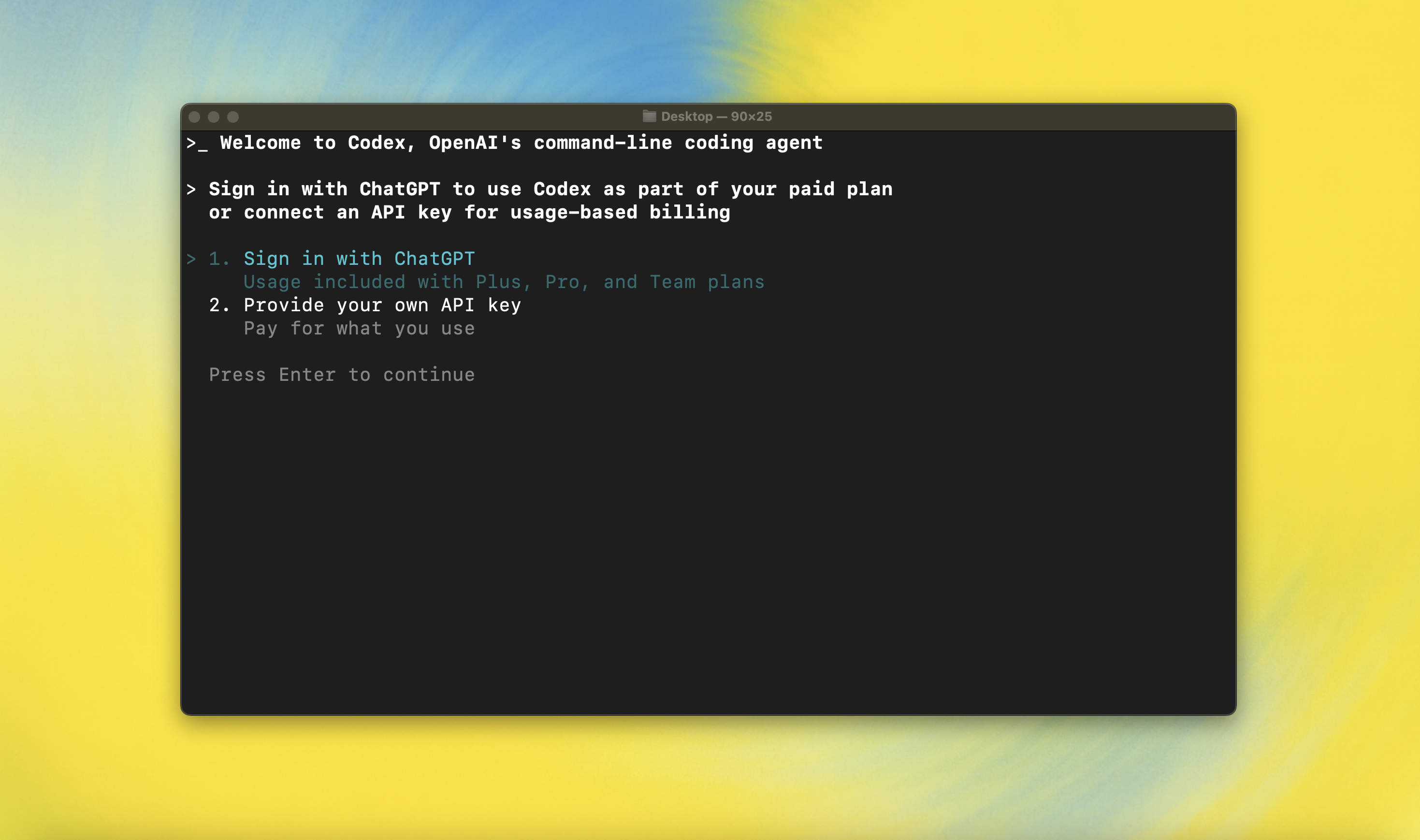

Using Codex with your ChatGPT plan

Run codex and select Sign in with ChatGPT. We recommend signing into your ChatGPT account to use Codex as part of your Plus, Pro, Team, Edu, or Enterprise plan. Learn more about what's included in your ChatGPT plan.

You can also use Codex with an API key, but this requires additional setup. If you previously used an API key for usage-based billing, see the migration steps. If you're having trouble with login, please comment on this issue.

Model Context Protocol (MCP)

Codex CLI supports MCP servers. Enable them by adding an mcp_servers section to your ~/.codex/config.toml.

Configuration

Codex CLI supports a rich set of configuration options, with preferences stored in ~/.codex/config.toml. For full configuration options, see Configuration.

Docs & FAQ

- Getting started

- Sandbox & approvals

- Authentication

- Advanced

- Zero data retention (ZDR)

- Contributing

- Install & build

- FAQ

- Open source fund

License

This repository is licensed under the Apache-2.0 License.