This adds some more capabilities to the default sandbox which I feel are safe. Most are in the [renderer.sb](https://source.chromium.org/chromium/chromium/src/+/main:sandbox/policy/mac/renderer.sb) sandbox for Chrome renderers, which I feel is fair game for Codex commands.

Specific changes:

1. Allow processes in the sandbox to send signals to any other process in the same sandbox (e.g. child processes or daemonized processes), instead of just themselves.
2. Allow user-preference-read.
3. Allow process-info* to anything in the same sandbox. This is a bit wider than Chromium allows, but it seems OK to me to allow anything in the sandbox to get details about other processes in the same sandbox. Bazel uses these to e.g. wait for another process to exit.
4. Allow all CPU feature detection; this seems harmless to me. It's wider than Chromium, but Chromium is concerned about fingerprinting and tightly controls which CPU features they actually care about, and we don't have either that restriction or that advantage.
5. Allow new sysctl-reads:

   ```
   (sysctl-name "vm.loadavg")
   (sysctl-name-prefix "kern.proc.pgrp.")
   (sysctl-name-prefix "kern.proc.pid.")
   (sysctl-name-prefix "net.routetable.")
   ```

   Bazel needs these for waiting on child processes and for communicating with its local build server, I believe. I wonder if we should just allow all (sysctl-read), as reading any arbitrary info about the system seems fine to me.
6. Allow iokit-open on RootDomainUserClient. This has to do with power management, I believe, and Chromium allows renderers to do this, so okay. Bazel needs it to boot successfully, possibly for sleep/wake callbacks?
7. Mach lookup to `com.apple.system.opendirectoryd.libinfo`, which has to do with user data, and which Chrome allows.
8. Mach lookup to `com.apple.PowerManagement.control`. Chromium allows its GPU process to do this, but not its renderers. Bazel needs this to boot, probably also related to sleep/wake handling.

OpenAI Codex CLI

`npm i -g @openai/codex` or `brew install codex`

Codex CLI is a coding agent from OpenAI that runs locally on your computer.

If you want Codex in your code editor (VS Code, Cursor, Windsurf), install in your IDE

If you are looking for the cloud-based agent from OpenAI, Codex Web, go to chatgpt.com/codex

Quickstart

Installing and running Codex CLI

Install globally with your preferred package manager. If you use npm:

npm install -g @openai/codex

Alternatively, if you use Homebrew:

brew install codex

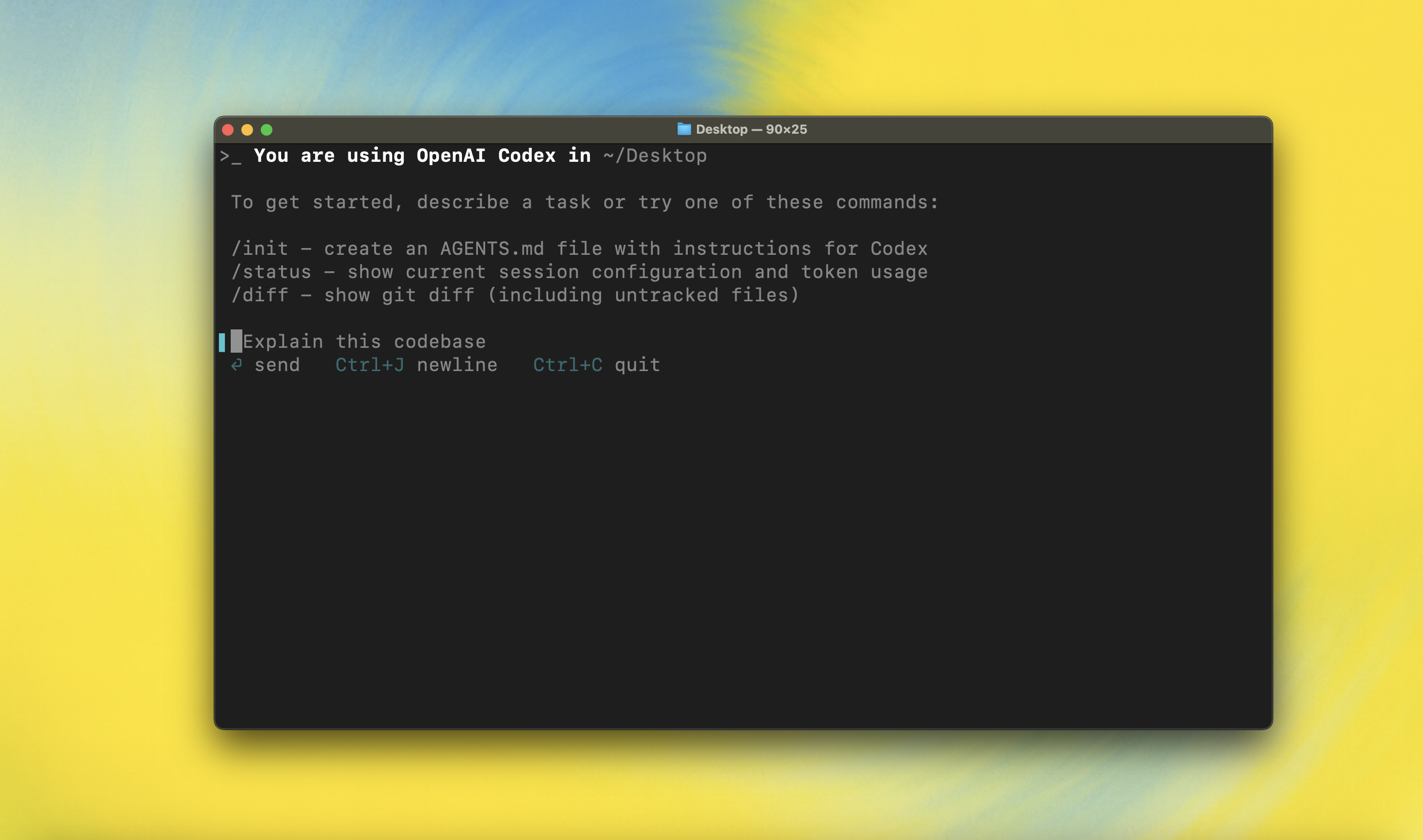

Then simply run codex to get started:

codex

You can also go to the latest GitHub Release and download the appropriate binary for your platform.

Each GitHub Release contains many executables, but in practice, you likely want one of these:

- macOS
  - Apple Silicon/arm64: `codex-aarch64-apple-darwin.tar.gz`
  - x86_64 (older Mac hardware): `codex-x86_64-apple-darwin.tar.gz`
- Linux
  - x86_64: `codex-x86_64-unknown-linux-musl.tar.gz`
  - arm64: `codex-aarch64-unknown-linux-musl.tar.gz`

Each archive contains a single entry with the platform baked into the name (e.g., codex-x86_64-unknown-linux-musl), so you likely want to rename it to codex after extracting it.
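As a rough sketch of the extract-and-rename step, the helpers below map the output of `uname -s` and `uname -m` to the entry names listed above and then unpack the archive. Both `codex_asset_name` and `codex_unpack` are hypothetical helper names, not part of Codex, and the sketch assumes the matching `.tar.gz` has already been downloaded into the current directory.

```shell
# Hypothetical helper: map an OS name and CPU architecture (as printed by
# `uname -s` and `uname -m`) to the release entry name for that platform.
codex_asset_name() {
  case "$1-$2" in
    Darwin-arm64)              echo "codex-aarch64-apple-darwin" ;;
    Darwin-x86_64)             echo "codex-x86_64-apple-darwin" ;;
    Linux-x86_64)              echo "codex-x86_64-unknown-linux-musl" ;;
    Linux-aarch64|Linux-arm64) echo "codex-aarch64-unknown-linux-musl" ;;
    *)                         echo "unsupported platform: $1 $2" >&2; return 1 ;;
  esac
}

# Hypothetical helper: extract "<asset>.tar.gz" from the current directory
# and rename the single extracted entry to "codex".
# Assumes the archive was downloaded beforehand.
codex_unpack() {
  asset=$(codex_asset_name "$(uname -s)" "$(uname -m)") || return 1
  tar -xzf "$asset.tar.gz" && mv "$asset" codex && chmod +x codex
}
```

Calling `codex_unpack` in the download directory should leave an executable named `codex` there, which can then be moved somewhere on your `PATH`.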

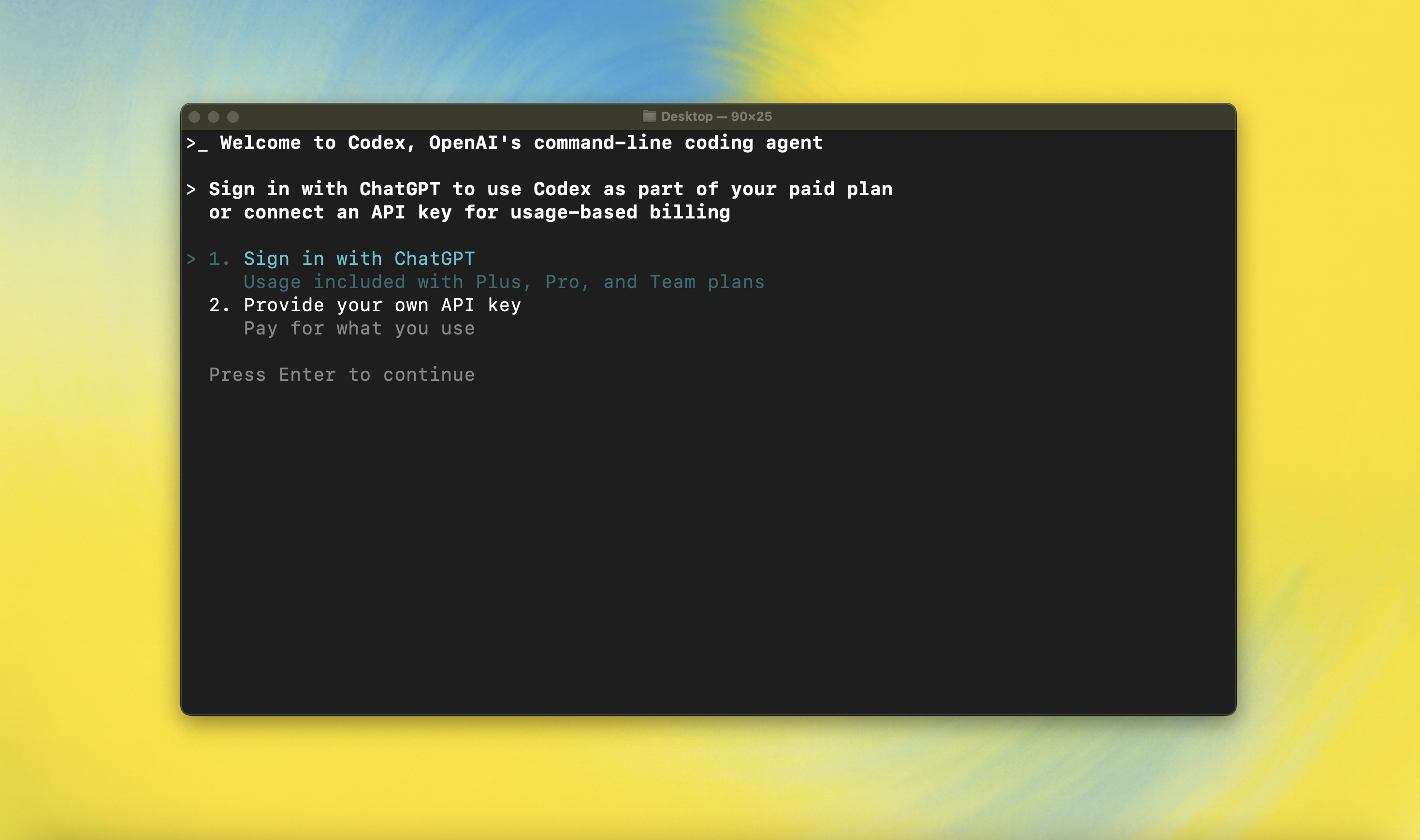

Using Codex with your ChatGPT plan

Run codex and select Sign in with ChatGPT. We recommend signing into your ChatGPT account to use Codex as part of your Plus, Pro, Team, Edu, or Enterprise plan. Learn more about what's included in your ChatGPT plan.

You can also use Codex with an API key, but this requires additional setup. If you previously used an API key for usage-based billing, see the migration steps. If you're having trouble with login, please comment on this issue.

Model Context Protocol (MCP)

Codex CLI supports MCP servers. Enable them by adding an `mcp_servers` section to your `~/.codex/config.toml`.
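A minimal sketch of what adding such a section might look like is shown below. The function name `add_example_mcp_server`, the server name `example`, and the package `example-mcp-server` are all placeholders, and the `command` and `args` keys are an assumption about the entry layout; check the configuration docs for the exact keys your version supports.

```shell
# Hypothetical helper: append an example MCP server entry to a Codex config file.
# "example" and "example-mcp-server" are placeholders, not real packages, and
# the "command"/"args" keys are assumed rather than confirmed by this document.
add_example_mcp_server() {
  config_file="$1"
  mkdir -p "$(dirname "$config_file")"
  cat >> "$config_file" <<'EOF'

[mcp_servers.example]
command = "npx"
args = ["-y", "example-mcp-server"]
EOF
}
```

For example, `add_example_mcp_server "$HOME/.codex/config.toml"` would append the entry to the default config location. The helper appends rather than overwrites, so existing settings in the file are left in place.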

Configuration

Codex CLI supports a rich set of configuration options, with preferences stored in ~/.codex/config.toml. For full configuration options, see Configuration.

Docs & FAQ

- Getting started

- Sandbox & approvals

- Authentication

- Advanced

- Zero data retention (ZDR)

- Contributing

- Install & build

- FAQ

- Open source fund

License

This repository is licensed under the Apache-2.0 License.