feature: Add "!cmd" user shell execution

This change lets users run local shell commands directly from the TUI by prefixing their input with `!` (e.g. `!ls`). Output is truncated to keep the exec cell usable, and Ctrl-C cleanly interrupts long-running commands (e.g. `!sleep 10000`).
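The description does not say how output is truncated. Here is a minimal sketch that assumes a head-and-tail strategy. The hypothetical `truncate_for_exec_cell` helper keeps the first and last few lines and collapses the rest into one marker line:

```rust
/// Hypothetical helper (not from the PR): keep the first `head` and last `tail`
/// lines of command output and replace the middle with a single marker line.
pub fn truncate_for_exec_cell(output: &str, head: usize, tail: usize) -> String {
    let lines: Vec<&str> = output.lines().collect();
    if lines.len() <= head + tail {
        // Short output fits in the cell as-is.
        return output.to_string();
    }
    let omitted = lines.len() - head - tail;
    let mut kept: Vec<String> = lines[..head].iter().map(|s| s.to_string()).collect();
    kept.push(format!("… +{omitted} lines"));
    kept.extend(lines[lines.len() - tail..].iter().map(|s| s.to_string()));
    kept.join("\n")
}
```

Keeping the tail as well as the head matters for shell output, because errors and exit summaries usually appear at the end.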

**Summary of changes**

- Route `Op::RunUserShellCommand` through a dedicated `UserShellCommandTask` (`core/src/tasks/user_shell.rs`), keeping the task logic out of `codex.rs`.

- Reuse the existing tool router: the task constructs a `ToolCall` for the `local_shell` tool and relies on `ShellHandler`, so no manual MCP tool lookup is required.

- Emit exec lifecycle events (`ExecCommandBegin`/`ExecCommandEnd`) so the TUI can show command metadata, live output, and exit status.
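The router reuse in the second bullet amounts to dispatch by tool name. The sketch below uses simplified, hypothetical stand-ins (`ToolRouter`, `ToolHandler`, `FakeShellHandler`, and a cut-down `ToolCall`), not the real Codex definitions. It shows why a task that names `local_shell` needs no tool lookup of its own:

```rust
use std::collections::HashMap;

/// Hypothetical, simplified tool call: a tool name plus an argv-style command.
#[derive(Debug, Clone)]
pub struct ToolCall {
    pub tool_name: String,
    pub command: Vec<String>,
}

/// Anything that can execute a tool call and return its textual output.
pub trait ToolHandler {
    fn handle(&self, call: &ToolCall) -> Result<String, String>;
}

/// Stand-in for ShellHandler: describes the argv instead of spawning a process.
pub struct FakeShellHandler;

impl ToolHandler for FakeShellHandler {
    fn handle(&self, call: &ToolCall) -> Result<String, String> {
        Ok(format!("would run: {}", call.command.join(" ")))
    }
}

/// Registry keyed by tool name, so callers never resolve handlers by hand.
#[derive(Default)]
pub struct ToolRouter {
    handlers: HashMap<String, Box<dyn ToolHandler>>,
}

impl ToolRouter {
    pub fn register(&mut self, name: &str, handler: Box<dyn ToolHandler>) {
        self.handlers.insert(name.to_string(), handler);
    }

    /// Look up the handler registered under `call.tool_name` and invoke it.
    pub fn dispatch(&self, call: &ToolCall) -> Result<String, String> {
        match self.handlers.get(&call.tool_name) {
            Some(handler) => handler.handle(call),
            None => Err(format!("unknown tool: {}", call.tool_name)),
        }
    }
}
```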

**End-to-end flow**

**TUI handling**

1. `ChatWidget::submit_user_message` (TUI) intercepts messages starting with `!`.

2. Non-empty commands dispatch `Op::RunUserShellCommand { command }`; empty commands surface a help hint.

3. No `UserInput` items are created, so nothing is enqueued for the model.
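The TUI-side branching in steps 1 to 3 can be sketched as a pure classification function. `Submission` and `classify_input` are hypothetical names. Trimming whitespace around the command is also an assumption:

```rust
/// Hypothetical outcome of classifying one submitted line of TUI input.
#[derive(Debug, PartialEq)]
pub enum Submission {
    /// Forwarded to core as Op::RunUserShellCommand { command }.
    RunUserShellCommand { command: String },
    /// `!` with nothing after it: show a help hint instead of submitting.
    ShellHelpHint,
    /// Ordinary chat input that would become UserInput for the model.
    UserMessage(String),
}

pub fn classify_input(text: &str) -> Submission {
    match text.strip_prefix('!') {
        Some(rest) => {
            // Assumption: surrounding whitespace is not part of the command.
            let command = rest.trim();
            if command.is_empty() {
                Submission::ShellHelpHint
            } else {
                Submission::RunUserShellCommand { command: command.to_string() }
            }
        }
        None => Submission::UserMessage(text.to_string()),
    }
}
```

Because `!` input never becomes a `UserMessage`, nothing reaches the model queue, which matches step 3.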

**Core submission loop**

4. The submission loop routes the op to `handlers::run_user_shell_command` (`core/src/codex.rs`).

5. A fresh `TurnContext` is created, and `Session::spawn_user_shell_command` enqueues `UserShellCommandTask`.
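Steps 4 and 5 can be sketched as a match over the incoming op. Every type here (`Op`, `TurnContext`, `Task`, `Session`) is a simplified, hypothetical stand-in. Each keeps only the fields needed to show the routing and the fresh per-command context:

```rust
use std::collections::VecDeque;

/// Hypothetical subset of submission ops.
#[derive(Debug)]
pub enum Op {
    UserInput { text: String },
    RunUserShellCommand { command: String },
}

/// Per-turn settings; a fresh one is built for every shell command.
#[derive(Debug, Clone, PartialEq)]
pub struct TurnContext {
    pub cwd: String,
    pub sub_id: String,
}

#[derive(Debug, PartialEq)]
pub enum Task {
    Regular { text: String, ctx: TurnContext },
    UserShellCommand { command: String, ctx: TurnContext },
}

#[derive(Default)]
pub struct Session {
    pub cwd: String,
    pub queue: VecDeque<Task>,
}

impl Session {
    fn new_turn_context(&self, sub_id: &str) -> TurnContext {
        TurnContext { cwd: self.cwd.clone(), sub_id: sub_id.to_string() }
    }

    /// Enqueue the shell task; it runs later, independently of model turns.
    pub fn spawn_user_shell_command(&mut self, ctx: TurnContext, command: String) {
        self.queue.push_back(Task::UserShellCommand { command, ctx });
    }
}

/// One iteration of a submission loop: route the op to its handler.
pub fn handle_op(session: &mut Session, sub_id: &str, op: Op) {
    match op {
        Op::RunUserShellCommand { command } => run_user_shell_command(session, sub_id, command),
        Op::UserInput { text } => {
            let ctx = session.new_turn_context(sub_id);
            session.queue.push_back(Task::Regular { text, ctx });
        }
    }
}

fn run_user_shell_command(session: &mut Session, sub_id: &str, command: String) {
    let ctx = session.new_turn_context(sub_id);
    session.spawn_user_shell_command(ctx, command);
}
```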

**Task execution**

6. `UserShellCommandTask::run` emits `TaskStartedEvent`, formats the command, and prepares a `ToolCall` targeting `local_shell`.

7. `ToolCallRuntime::handle_tool_call` dispatches to `ShellHandler`.
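Steps 6 and 7 can be sketched as building a tool call for `local_shell` after announcing the task. The description does not say how the command is formatted. Wrapping it as `<shell> -lc <command>` is an assumption, and `prepare_user_shell_call` and `format_command` are hypothetical:

```rust
/// Hypothetical, simplified event emitted when the task starts.
#[derive(Debug, PartialEq)]
pub enum Event {
    TaskStarted,
}

/// Simplified stand-in for the ToolCall handed to the tool router.
#[derive(Debug, PartialEq)]
pub struct ToolCall {
    pub tool_name: String,
    pub call_id: String,
    pub command: Vec<String>,
    pub workdir: Option<String>,
}

/// Assumption: the raw `!` text is passed to a login shell as one string,
/// so pipes, globs, and the user's login profile apply.
pub fn format_command(shell: &str, raw: &str) -> Vec<String> {
    vec![shell.to_string(), "-lc".to_string(), raw.to_string()]
}

/// Announce the task, then build the call the router will dispatch to the shell handler.
pub fn prepare_user_shell_call(
    call_id: &str,
    shell: &str,
    raw: &str,
    emit: &mut dyn FnMut(Event),
) -> ToolCall {
    emit(Event::TaskStarted);
    ToolCall {
        tool_name: "local_shell".to_string(),
        call_id: call_id.to_string(),
        command: format_command(shell, raw),
        workdir: None, // None: use the session's working directory.
    }
}
```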

**Shell tool runtime**

8. `ShellHandler::run_exec_like` launches the process via the unified exec runtime, honoring sandbox and shell policies, and emits `ExecCommandBegin`/`ExecCommandEnd`.

9. Stdout and stderr are captured for the UI, but the task does not turn the resulting `ToolOutput` into a model response.
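The runtime behavior in steps 8 and 9, plus the Ctrl-C interruption mentioned at the top, can be approximated with the standard library alone. This sketch assumes a Unix-like system with `sh`. It applies no sandbox or shell policy, and an `AtomicBool` stands in for the TUI's Ctrl-C. `ExecEvent` and `run_user_command` are hypothetical:

```rust
use std::io::Read;
use std::process::{Command, Stdio};
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;
use std::thread;
use std::time::Duration;

/// Hypothetical, simplified exec lifecycle events.
#[derive(Debug, PartialEq)]
pub enum ExecEvent {
    Begin { command: String },
    End { exit_code: Option<i32>, stdout: String, stderr: String },
}

/// Read a pipe to the end on a background thread so the child never blocks on a full pipe.
fn drain<R: Read + Send + 'static>(mut pipe: R) -> thread::JoinHandle<String> {
    thread::spawn(move || {
        let mut bytes = Vec::new();
        // Read errors are ignored in this sketch; bytes read so far are kept.
        let _ = pipe.read_to_end(&mut bytes);
        String::from_utf8_lossy(&bytes).into_owned()
    })
}

/// Run `command` under `sh -c`, emitting Begin/End events.
/// Setting `cancel` (stand-in for Ctrl-C) kills the child. No sandboxing.
pub fn run_user_command(
    command: &str,
    cancel: Arc<AtomicBool>,
    emit: &mut dyn FnMut(ExecEvent),
) -> std::io::Result<()> {
    emit(ExecEvent::Begin { command: command.to_string() });
    let mut child = Command::new("sh")
        .arg("-c")
        .arg(command)
        .stdin(Stdio::null())
        .stdout(Stdio::piped())
        .stderr(Stdio::piped())
        .spawn()?;
    let stdout = drain(child.stdout.take().expect("stdout is piped"));
    let stderr = drain(child.stderr.take().expect("stderr is piped"));
    let status = loop {
        if let Some(status) = child.try_wait()? {
            break status;
        }
        if cancel.load(Ordering::SeqCst) {
            // Kills only the direct child; a fuller version would signal the process group.
            child.kill()?;
            break child.wait()?;
        }
        thread::sleep(Duration::from_millis(10));
    };
    // Captured output goes only into the End event, never back to a model.
    emit(ExecEvent::End {
        exit_code: status.code(), // None when the child was killed by a signal.
        stdout: stdout.join().unwrap_or_default(),
        stderr: stderr.join().unwrap_or_default(),
    });
    Ok(())
}
```

The function returns only success or an I/O error to its caller. The captured output travels solely inside the `End` event, mirroring step 9.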

**Completion**

10. After `ExecCommandEnd`, the task finishes without an assistant message; the session marks it complete, and the exec cell displays the final output.
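The completion rule in step 10 implies a fixed event shape for a user shell task. A hypothetical checker could assert that shape. It works over a simplified `Event` enum whose variant and field names are assumptions:

```rust
/// Hypothetical, simplified event stream for one task.
#[derive(Debug, Clone, PartialEq)]
pub enum Event {
    TaskStarted,
    ExecCommandBegin { call_id: String },
    ExecCommandEnd { call_id: String, exit_code: i32 },
    AgentMessage { text: String },
    TaskComplete { last_agent_message: Option<String> },
}

/// A user shell task starts, runs exactly one exec (matching call ids),
/// and completes without any assistant message.
pub fn is_valid_user_shell_lifecycle(events: &[Event]) -> bool {
    match events {
        [Event::TaskStarted,
         Event::ExecCommandBegin { call_id: begin_id },
         Event::ExecCommandEnd { call_id: end_id, .. },
         Event::TaskComplete { last_agent_message: None }] => begin_id == end_id,
        _ => false,
    }
}
```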

**Conversation context**

- The command and its output never enter the conversation history or the model prompt; the flow is local-only.

- Only exec and task events are emitted, for UI rendering.
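The local-only guarantee can be pictured as two separate buffers on the session. This is a hypothetical model in which `Session`, `history`, and `ui_events` are illustrative names. It shows that a `!` command writes only to the UI side:

```rust
/// Hypothetical, simplified session state: model-visible history is kept
/// separate from the UI event stream.
#[derive(Default)]
pub struct Session {
    /// Items that would be sent to the model on the next turn.
    pub history: Vec<String>,
    /// Events consumed only by the TUI.
    pub ui_events: Vec<String>,
}

impl Session {
    /// Ordinary chat input is recorded in history for the model.
    pub fn record_user_message(&mut self, text: &str) {
        self.history.push(format!("user: {text}"));
    }

    /// A `!` command only produces UI events; history is deliberately untouched.
    pub fn record_user_shell_result(&mut self, command: &str, output: &str, exit_code: i32) {
        self.ui_events.push(format!("exec_begin: {command}"));
        self.ui_events.push(format!("exec_end: exit={exit_code} output={output}"));
    }
}
```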

**Demo video**

https://github.com/user-attachments/assets/fcd114b0-4304-4448-a367-a04c43e0b996

`npm i -g @openai/codex` or `brew install --cask codex`

Codex CLI is a coding agent from OpenAI that runs locally on your computer.

If you want Codex in your code editor (VS Code, Cursor, Windsurf), install it in your IDE.

If you are looking for the cloud-based agent from OpenAI, Codex Web, go to chatgpt.com/codex.

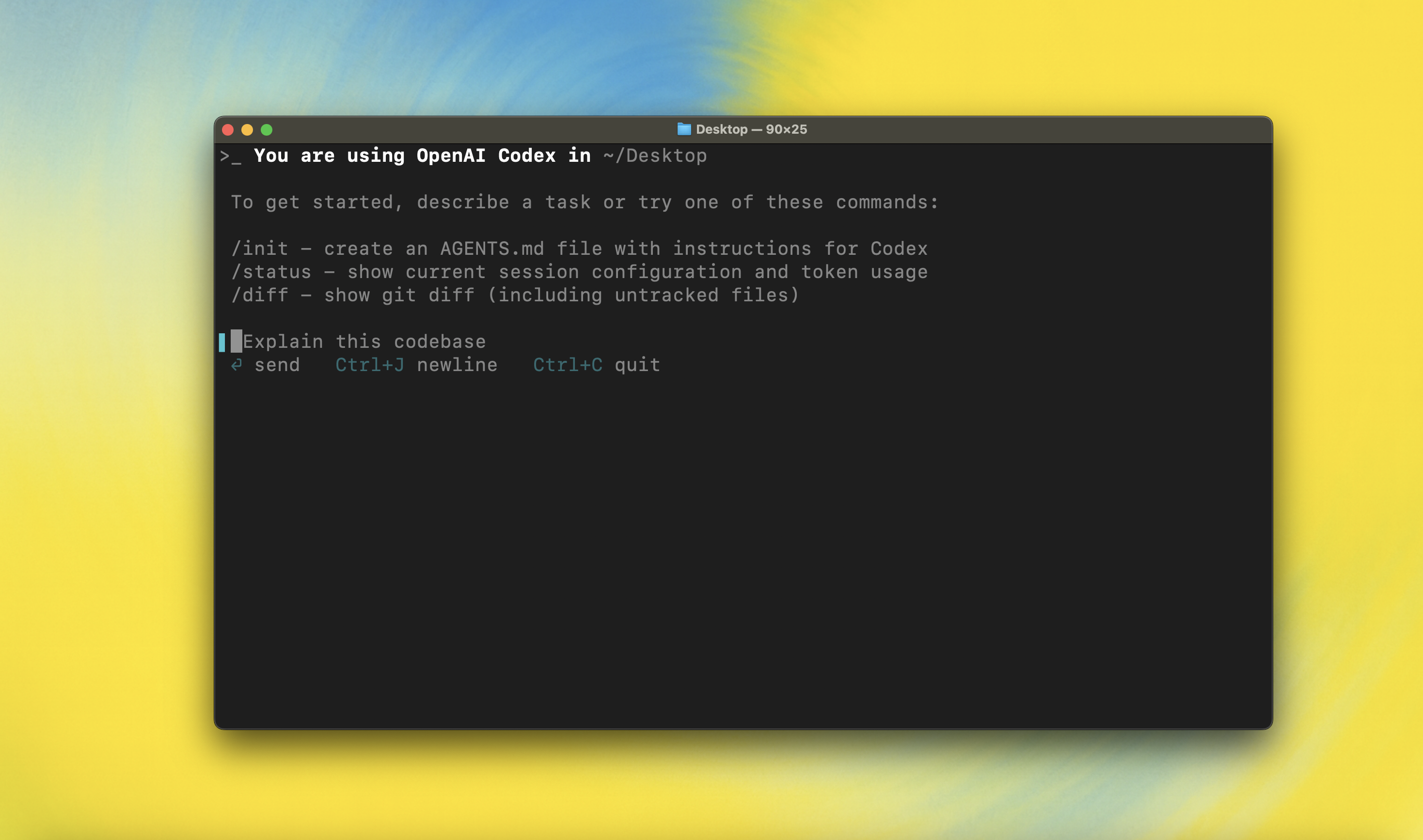

**Quickstart**

**Installing and running Codex CLI**

Install globally with your preferred package manager. If you use npm:

`npm install -g @openai/codex`

Alternatively, if you use Homebrew:

`brew install --cask codex`

Then run `codex` to get started:

`codex`

If you're running into upgrade issues with Homebrew, see the FAQ entry on `brew upgrade codex`.

You can also go to the latest GitHub Release and download the appropriate binary for your platform.

Each GitHub Release contains many executables, but in practice, you likely want one of these:

- macOS
  - Apple Silicon/arm64: `codex-aarch64-apple-darwin.tar.gz`
  - x86_64 (older Mac hardware): `codex-x86_64-apple-darwin.tar.gz`
- Linux
  - x86_64: `codex-x86_64-unknown-linux-musl.tar.gz`
  - arm64: `codex-aarch64-unknown-linux-musl.tar.gz`

Each archive contains a single entry with the platform baked into the name (e.g., `codex-x86_64-unknown-linux-musl`), so you likely want to rename it to `codex` after extracting it.
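You can automate the choice of archive with the standard library's `std::env::consts::OS` and `ARCH` constants. The mapping restates the list above. `archive_for`, `binary_name`, and `archive_for_this_machine` are hypothetical helpers:

```rust
use std::env::consts::{ARCH, OS};

/// Map an (OS, architecture) pair to one of the release archives listed above.
pub fn archive_for(os: &str, arch: &str) -> Option<&'static str> {
    match (os, arch) {
        ("macos", "aarch64") => Some("codex-aarch64-apple-darwin.tar.gz"),
        ("macos", "x86_64") => Some("codex-x86_64-apple-darwin.tar.gz"),
        ("linux", "x86_64") => Some("codex-x86_64-unknown-linux-musl.tar.gz"),
        ("linux", "aarch64") => Some("codex-aarch64-unknown-linux-musl.tar.gz"),
        _ => None, // Platforms not covered by the list above.
    }
}

/// The single entry inside each archive is the archive name minus `.tar.gz`.
pub fn binary_name(archive: &str) -> &str {
    archive.strip_suffix(".tar.gz").unwrap_or(archive)
}

/// Archive for the platform this program was compiled for.
pub fn archive_for_this_machine() -> Option<&'static str> {
    archive_for(OS, ARCH)
}
```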

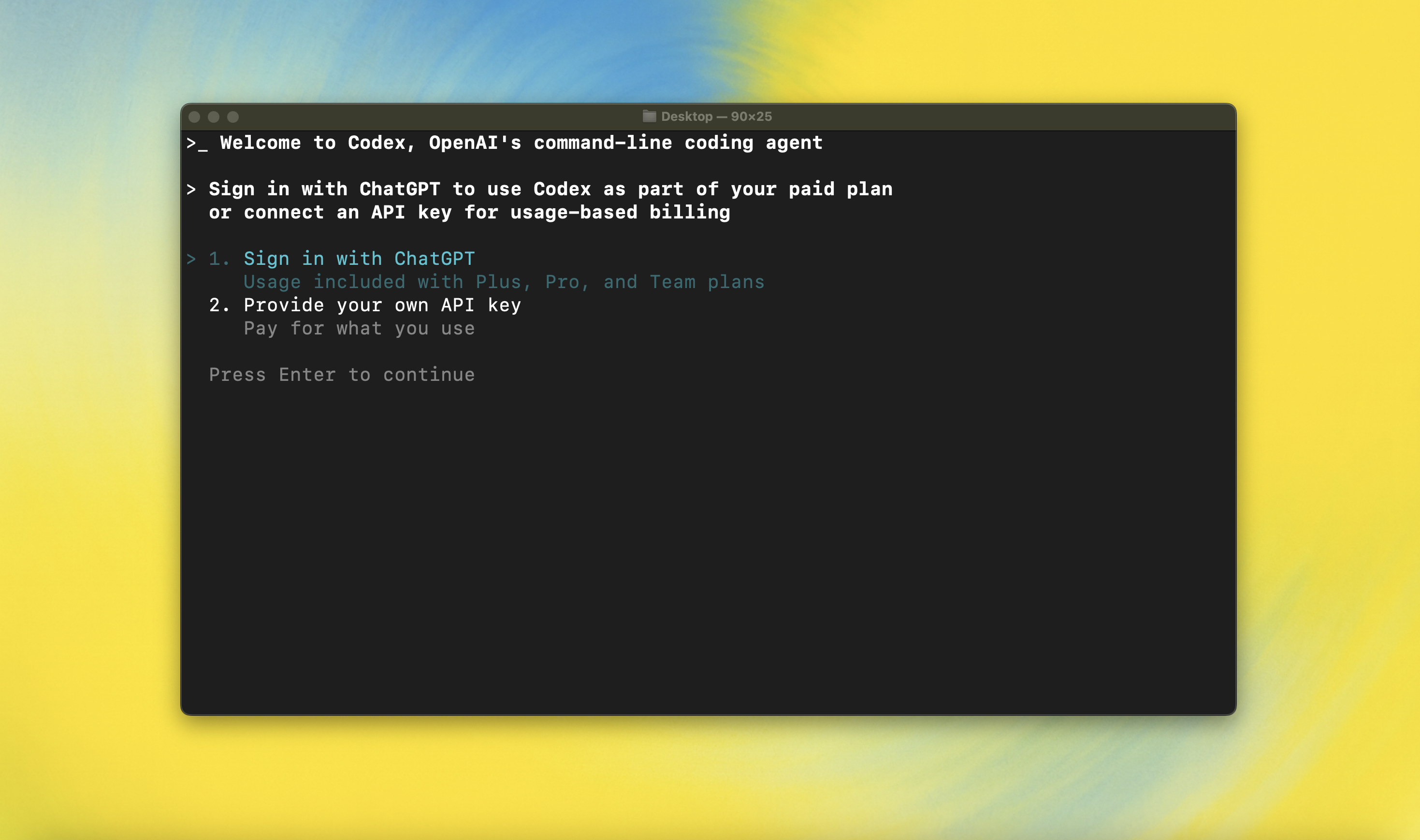

**Using Codex with your ChatGPT plan**

Run `codex` and select **Sign in with ChatGPT**. We recommend signing in to your ChatGPT account to use Codex as part of your Plus, Pro, Team, Edu, or Enterprise plan. Learn more about what's included in your ChatGPT plan.

You can also use Codex with an API key, but this requires additional setup. If you previously used an API key for usage-based billing, see the migration steps. If you're having trouble with login, please comment on this issue.

**Model Context Protocol (MCP)**

Codex can access MCP servers. To configure them, refer to the config docs.

**Configuration**

Codex CLI supports a rich set of configuration options, with preferences stored in `~/.codex/config.toml`. For full configuration options, see Configuration.

**Docs & FAQ**

- Getting started

- Sandbox & approvals

- Authentication

- Automating Codex

- Advanced

- Zero data retention (ZDR)

- Contributing

- Install & build

- FAQ

- Open source fund

**License**

This repository is licensed under the Apache-2.0 License.