## Current State Observations

- `Session` currently holds many unrelated responsibilities (history, approval queues, task handles, rollout recorder, shell discovery, token tracking, etc.), making it hard to reason about ownership and lifetimes.
- The anonymous `State` struct inside `codex.rs` mixes session-long data with turn-scoped queues and approval bookkeeping.
- Turn execution (`run_task`) relies on ad-hoc local variables that should conceptually belong to a per-turn state object.
- External modules (`codex::compact`, tests) frequently poke the raw `Session.state` mutex, which couples them to implementation details.
- Interrupts, approvals, and rollout persistence all have bespoke cleanup paths, contributing to subtle bugs when a turn is aborted mid-flight.

## Desired End State

- Keep a slim `Session` object that acts as the orchestrator and façade. It should expose a focused API (submit, approvals, interrupts, event emission) without storing unrelated fields directly.
- Introduce a `state` module that encapsulates all mutable data structures:
  - `SessionState`: session-persistent data (history, approved commands, token/rate-limit info, maybe user preferences).
  - `ActiveTurn`: metadata for the currently running turn (sub-id, task kind, abort handle) and an `Arc<TurnState>`.
  - `TurnState`: all turn-scoped pieces (pending inputs, approval waiters, diff tracker, review history, auto-compact flags, last agent message, outstanding tool call bookkeeping).
- Group long-lived helpers/managers into a dedicated `SessionServices` struct so `Session` does not accumulate "random" fields.
- Provide clear, lock-safe APIs so other modules never touch raw mutexes.
- Ensure every turn creates/drops a `TurnState` and that interrupts/finishes delegate cleanup to it.
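
The split between session-persistent and turn-scoped state can be sketched roughly as follows. This is a minimal illustration, not the actual `codex.rs` code: the field selection, the method names (`start_turn`, `push_pending_input`, `end_turn`, `approve_command`, `is_approved`, `history_len`), and the choice to wrap `TurnState` in a `Mutex` inside the `Arc` are all assumptions made for the example.

```rust
use std::collections::HashSet;
use std::sync::{Arc, Mutex};

/// Session-persistent data: survives across turns (fields are illustrative).
#[derive(Default)]
#[allow(dead_code)]
struct SessionState {
    history: Vec<String>,
    approved_commands: HashSet<String>,
    total_tokens: u64,
}

/// Turn-scoped data: created when a turn starts, dropped when it ends.
#[derive(Default)]
#[allow(dead_code)]
struct TurnState {
    pending_input: Vec<String>,
    last_agent_message: Option<String>,
}

/// Metadata for the running turn plus a shared handle to its state.
/// Assumption: a Mutex inside the Arc provides the interior mutability.
struct ActiveTurn {
    sub_id: String,
    turn_state: Arc<Mutex<TurnState>>,
}

/// Long-lived helpers grouped in one place (placeholder field only).
#[derive(Default)]
#[allow(dead_code)]
struct SessionServices {
    rollout_path: Option<String>,
}

/// Slim facade: callers go through methods and never see the mutexes.
#[derive(Default)]
#[allow(dead_code)]
struct Session {
    state: Mutex<SessionState>,
    active_turn: Mutex<Option<ActiveTurn>>,
    services: SessionServices,
}

impl Session {
    /// Every turn gets a fresh TurnState.
    fn start_turn(&self, sub_id: &str) {
        let turn = ActiveTurn {
            sub_id: sub_id.to_string(),
            turn_state: Arc::new(Mutex::new(TurnState::default())),
        };
        *self.active_turn.lock().unwrap() = Some(turn);
    }

    /// Queues input for the running turn; returns false if no turn is active.
    fn push_pending_input(&self, input: &str) -> bool {
        let guard = self.active_turn.lock().unwrap();
        match guard.as_ref() {
            Some(turn) => {
                turn.turn_state.lock().unwrap().pending_input.push(input.to_string());
                true
            }
            None => false,
        }
    }

    /// Shared cleanup path for both finish and interrupt: take the turn,
    /// move its pending input into history, and let the TurnState drop.
    fn end_turn(&self) -> Option<String> {
        let turn = self.active_turn.lock().unwrap().take()?;
        let pending = std::mem::take(&mut turn.turn_state.lock().unwrap().pending_input);
        self.state.lock().unwrap().history.extend(pending);
        Some(turn.sub_id)
    }

    fn approve_command(&self, cmd: &str) {
        self.state.lock().unwrap().approved_commands.insert(cmd.to_string());
    }

    fn is_approved(&self, cmd: &str) -> bool {
        self.state.lock().unwrap().approved_commands.contains(cmd)
    }

    fn history_len(&self) -> usize {
        self.state.lock().unwrap().history.len()
    }
}

fn main() {
    let session = Session::default();
    session.start_turn("sub-1");
    session.push_pending_input("hello");
    session.approve_command("ls");
    let ended = session.end_turn();
    println!("ended turn: {:?}, history entries: {}", ended, session.history_len());
    println!("ls approved: {}", session.is_approved("ls"));
}
```

In this sketch, finishing and interrupting a turn would both go through `end_turn`, so there is a single cleanup path, and external modules only ever call methods on `Session` rather than locking its fields directly.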

OpenAI Codex CLI

npm i -g @openai/codex

or brew install codex

Codex CLI is a coding agent from OpenAI that runs locally on your computer.

If you want Codex in your code editor (VS Code, Cursor, Windsurf), install in your IDE

If you are looking for the cloud-based agent from OpenAI, Codex Web, go to chatgpt.com/codex

Quickstart

Installing and running Codex CLI

Install globally with your preferred package manager. If you use npm:

npm install -g @openai/codex

Alternatively, if you use Homebrew:

brew install codex

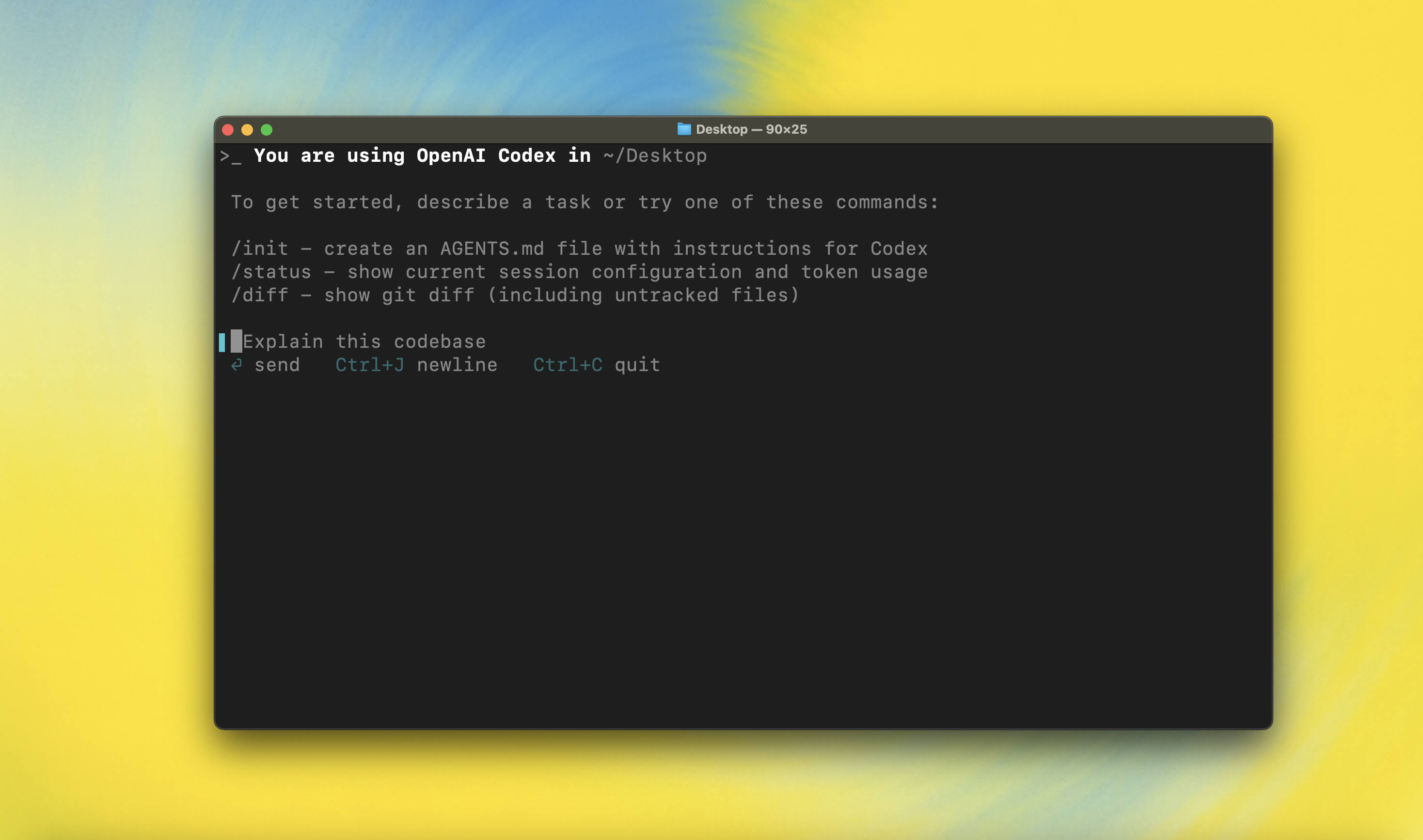

Then simply run codex to get started:

codex

You can also go to the latest GitHub Release and download the appropriate binary for your platform.

Each GitHub Release contains many executables, but in practice, you likely want one of these:

- macOS

  - Apple Silicon/arm64: codex-aarch64-apple-darwin.tar.gz

  - x86_64 (older Mac hardware): codex-x86_64-apple-darwin.tar.gz

- Linux

  - x86_64: codex-x86_64-unknown-linux-musl.tar.gz

  - arm64: codex-aarch64-unknown-linux-musl.tar.gz

Each archive contains a single entry with the platform baked into the name (e.g., codex-x86_64-unknown-linux-musl), so you likely want to rename it to codex after extracting it.

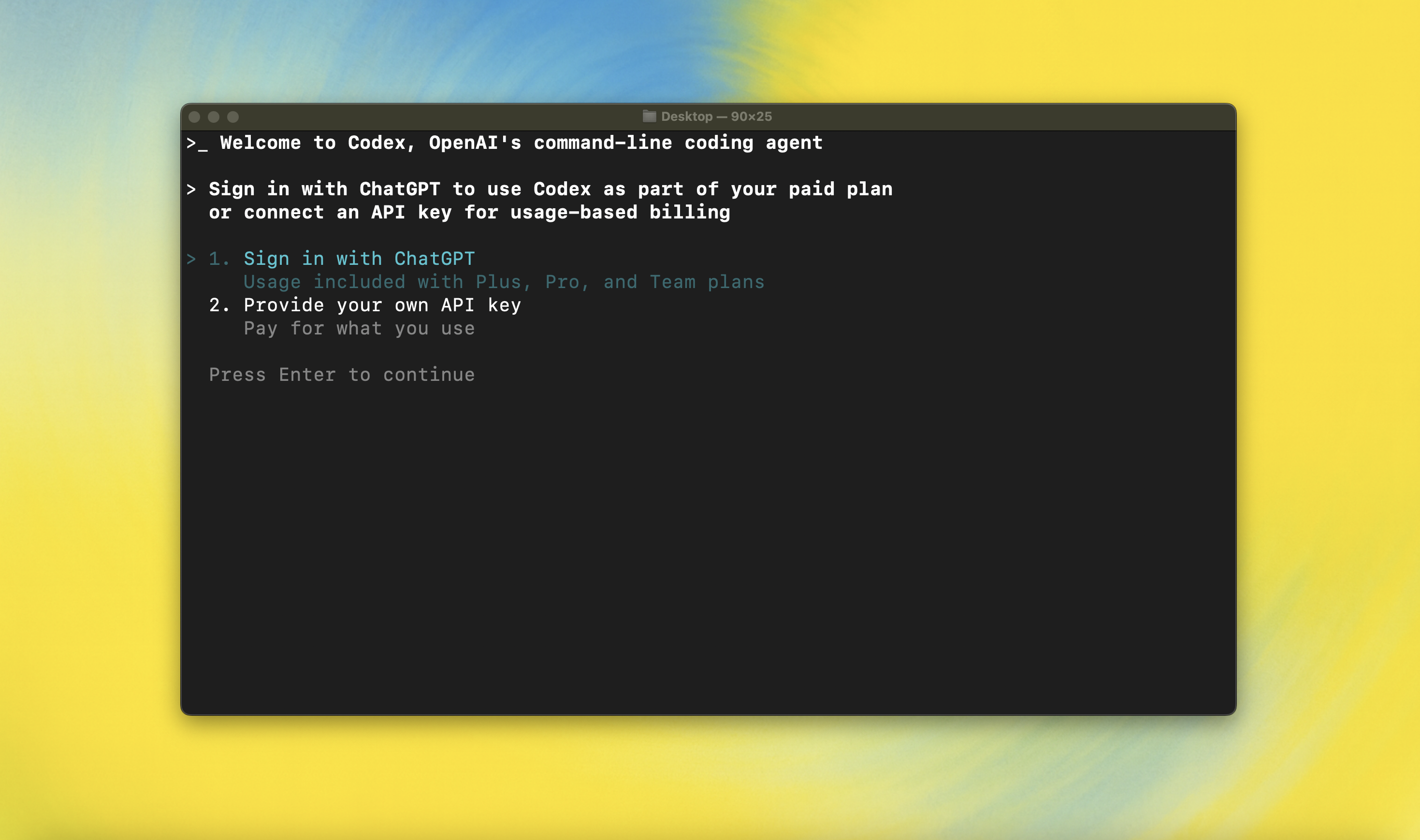

Using Codex with your ChatGPT plan

Run codex and select Sign in with ChatGPT. We recommend signing into your ChatGPT account to use Codex as part of your Plus, Pro, Team, Edu, or Enterprise plan. Learn more about what's included in your ChatGPT plan.

You can also use Codex with an API key, but this requires additional setup. If you previously used an API key for usage-based billing, see the migration steps. If you're having trouble with login, please comment on this issue.

Model Context Protocol (MCP)

Codex CLI supports MCP servers. Enable by adding an mcp_servers section to your ~/.codex/config.toml.
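
As a rough illustration, an `mcp_servers` entry might look like the fragment below. The server name `example-server`, the package `example-mcp-server`, and the environment variable are hypothetical placeholders, and the `command`/`args`/`env` keys are shown as an assumption about the expected shape; check the configuration documentation for the options your version supports.

```toml
# ~/.codex/config.toml
# Hypothetical server entry; replace the name and command with a real MCP server.
[mcp_servers.example-server]
command = "npx"
args = ["-y", "example-mcp-server"]
env = { "EXAMPLE_API_KEY" = "value" }
```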

Configuration

Codex CLI supports a rich set of configuration options, with preferences stored in ~/.codex/config.toml. For full configuration options, see Configuration.

Docs & FAQ

- Getting started

- Sandbox & approvals

- Authentication

- Advanced

- Zero data retention (ZDR)

- Contributing

- Install & build

- FAQ

- Open source fund

License

This repository is licensed under the Apache-2.0 License.