While creating a basic MCP server in https://github.com/openai/codex/pull/792, I discovered a number of bugs with the initial `mcp-types` crate that I needed to fix in order to implement the server.

For example, I discovered that when serializing a message, `"jsonrpc": "2.0"` was not being included.

I changed the codegen so that the field is added as:

```rust
#[serde(rename = "jsonrpc", default = "default_jsonrpc")]
pub jsonrpc: String,
```

This ensures that the field is serialized as `"2.0"`, though the field still has to be assigned, which is tedious. I may experiment with `Default` or something else in the future. (I also considered creating a custom serializer, but I'm not sure it's worth the trouble.)

While here, I also added `MCP_SCHEMA_VERSION` and `JSONRPC_VERSION` as `pub const`s for the crate.
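
One way the `Default` experiment could look is sketched below. It uses only the standard library and reuses the `default_jsonrpc` name from the attribute above. The trimmed-down `JsonRpcRequest` type is hypothetical, and so is the value `"2025-03-26"` for `MCP_SCHEMA_VERSION`, which is inferred from the vendored schema's directory name. This is not the generated code:

```rust
/// Assumed values: JSON-RPC 2.0 and the vendored 2025-03-26 MCP schema.
pub const JSONRPC_VERSION: &str = "2.0";
pub const MCP_SCHEMA_VERSION: &str = "2025-03-26";

fn default_jsonrpc() -> String {
    JSONRPC_VERSION.to_owned()
}

/// Hypothetical, trimmed-down request type; the generated one has more fields.
#[derive(Debug, Clone, PartialEq)]
pub struct JsonRpcRequest {
    pub jsonrpc: String,
    pub id: i64,
    pub method: String,
}

impl Default for JsonRpcRequest {
    fn default() -> Self {
        Self {
            // The one field callers should never have to think about.
            jsonrpc: default_jsonrpc(),
            id: 0,
            method: String::new(),
        }
    }
}
```

Callers could then write `JsonRpcRequest { id: 1, method: "initialize".into(), ..Default::default() }` and never touch `jsonrpc` directly.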

I also discovered that MCP rejects `null` values for optional fields, so I had to add `#[serde(skip_serializing_if = "Option::is_none")]` on `Option` fields.
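
The following hand-rolled sketch shows the effect of that attribute without pulling in serde. The `ListParams` type and its fields are hypothetical. An absent optional field is left out of the output entirely rather than written as `null`:

```rust
/// Hypothetical params type with one required and two optional fields.
pub struct ListParams {
    pub uri: String,
    pub cursor: Option<String>,
    pub limit: Option<u32>,
}

/// Escapes the characters JSON requires inside a string literal (minimal sketch).
fn json_string(s: &str) -> String {
    let mut out = String::from("\"");
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out.push('"');
    out
}

impl ListParams {
    /// Serializes to JSON, omitting `None` fields instead of emitting `null`,
    /// which mirrors what `skip_serializing_if = "Option::is_none"` does with serde.
    pub fn to_json(&self) -> String {
        let mut fields = vec![format!("\"uri\":{}", json_string(&self.uri))];
        if let Some(cursor) = &self.cursor {
            fields.push(format!("\"cursor\":{}", json_string(cursor)));
        }
        if let Some(limit) = self.limit {
            fields.push(format!("\"limit\":{limit}"));
        }
        format!("{{{}}}", fields.join(","))
    }
}
```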

---

[//]: # (BEGIN SAPLING FOOTER)

Stack created with [Sapling](https://sapling-scm.com). Best reviewed

with [ReviewStack](https://reviewstack.dev/openai/codex/pull/791).

* #792

* __->__ #791

This adds our own `mcp-types` crate to our Cargo workspace. We vendor in

the

[`2025-03-26/schema.json`](05f2045136/schema/2025-03-26/schema.json)

from the MCP repo and introduce a `generate_mcp_types.py` script to

codegen the `lib.rs` from the JSON schema.

Test coverage is currently light, but I plan to refine things as we

start making use of this crate.

And yes, I am aware that

https://github.com/modelcontextprotocol/rust-sdk exists, though the

published https://crates.io/crates/rmcp appears to be a competing

effort. While things are up in the air, it seems better for us to

control our own version of this code.

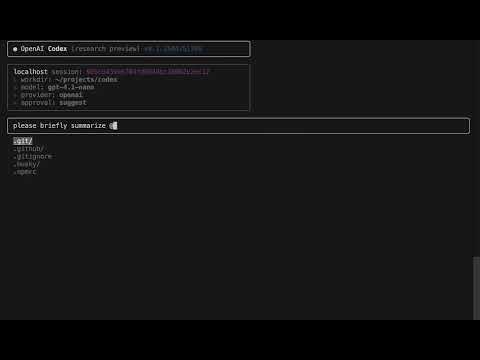

Incidentally, Codex did a lot of the work for this PR. I told it to

never edit `lib.rs` directly and instead to update

`generate_mcp_types.py` and then re-run it to update `lib.rs`. It

followed these instructions and once things were working end-to-end, I

iteratively asked for changes to the tests until the API looked

reasonable (and the code worked). Codex was responsible for figuring out

what to do to `generate_mcp_types.py` to achieve the requested test/API

changes.

Building on top of https://github.com/openai/codex/pull/757, this PR updates Codex to use the Landlock executor binary for sandboxing in the Node.js CLI. Note that Codex has to be invoked with either `--full-auto` or `--auto-edit` to activate sandboxing. (Using `--suggest` or `--dangerously-auto-approve-everything` ensures the sandboxing codepath will not be exercised.)
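
The flag-to-sandbox rule above can be summarized as a small sketch. The `ApprovalMode` enum and the `uses_sandbox()` function are hypothetical names for illustration only. They are not the CLI's actual code, which is TypeScript:

```rust
/// Approval modes named after the CLI flags; the enum itself is hypothetical.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ApprovalMode {
    Suggest,
    AutoEdit,
    FullAuto,
    DangerouslyAutoApproveEverything,
}

/// True when commands should be routed through the Landlock executor on Linux.
pub fn uses_sandbox(mode: ApprovalMode) -> bool {
    matches!(mode, ApprovalMode::AutoEdit | ApprovalMode::FullAuto)
}
```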

When I tested this on a Linux host (specifically, `Ubuntu 24.04.1 LTS`), things worked as expected: I ran Codex CLI with `--full-auto` and then asked it to do `echo 'hello mbolin' into hello_world.txt`, and it succeeded without prompting me.

However, in my testing, I discovered that the sandboxing did *not* work when using `--full-auto` in a Linux Docker container from a macOS host. I updated the code to throw a detailed error message when this happens:

This introduces `./codex-cli/scripts/stage_release.sh`, which is a shell script that stages a release for the Node.js module in a temp directory. It updates the release to include these native binaries:

```
bin/codex-linux-sandbox-arm64
bin/codex-linux-sandbox-x64
```

though this PR does not update Codex CLI to use them yet.

When doing local development, run `./codex-cli/scripts/install_native_deps.sh` to install these in your own `bin/` folder.

This PR also updates `README.md` to document the new workflow.
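
The CLI does not choose between these binaries yet. The following hypothetical sketch shows how a caller might map a platform/architecture pair to one of the staged paths, using Node.js-style `process.platform`/`process.arch` strings. The function name is made up:

```rust
/// Hypothetical helper: returns the staged sandbox binary for a platform/arch pair.
/// Inputs use Node.js-style names such as "linux", "arm64", and "x64".
pub fn linux_sandbox_binary(platform: &str, arch: &str) -> Option<&'static str> {
    if platform != "linux" {
        // The Landlock executor only applies on Linux.
        return None;
    }
    match arch {
        "arm64" => Some("bin/codex-linux-sandbox-arm64"),
        "x64" => Some("bin/codex-linux-sandbox-x64"),
        _ => None,
    }
}
```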

---

[//]: # (BEGIN SAPLING FOOTER)

Stack created with [Sapling](https://sapling-scm.com). Best reviewed with [ReviewStack](https://reviewstack.dev/openai/codex/pull/757).

* #763
* __->__ #757

## `0.1.2504301751`

### 🚀 Features

- User config api key (#569)
- `@mention` files in codex (#701)
- Add `--reasoning` CLI flag (#314)
- Lower default retry wait time and increase number of tries (#720)
- Add common package registries domains to allowed-domains list (#414)

### 🪲 Bug Fixes

- Insufficient quota message (#758)
- Input keyboard shortcut opt+delete (#685)
- `/diff` should include untracked files (#686)
- Only allow running without sandbox if explicitly marked in safe container (#699)
- Tighten up check for `/usr/bin/sandbox-exec` (#710)
- Check if `sandbox-exec` is available (#696)
- Duplicate messages in quiet mode (#680)

Solves #700

## State of the World Before

Prior to this PR, when users wanted to share file contents with Codex, they had two options:

- Manually copy and paste file contents into the chat
- Wait for the assistant to use the shell tool to view the file

The second approach required the assistant to:

1. Recognize the need to view a file
2. Execute a shell tool call
3. Wait for the tool call to complete
4. Process the file contents

This consumed extra tokens and reduced user control over which files were shared with the model.

## State of the World After

With this PR, users can now:

- Reference files directly in their chat input using the `@path` syntax
- Have file contents automatically expanded into XML blocks before being sent to the LLM

For example, users can type `@src/utils/config.js` in their message, and the file contents will be included in context. Within the terminal chat history, these file blocks will be collapsed back to `@path` format in the UI for clean presentation.

File tag suggestions:

<img width="857" alt="file-suggestions" src="https://github.com/user-attachments/assets/397669dc-ad83-492d-b5f0-164fab2ff4ba" />

Tagging files in action:

<img width="858" alt="tagging-files" src="https://github.com/user-attachments/assets/0de9d559-7b7f-4916-aeff-87ae9b16550a" />

Demo video of file tagging:

[Watch the demo on YouTube](https://www.youtube.com/watch?v=vL4LqtBnqt8)

## Implementation Details

This PR consists of two main components:

1. **File Tag Utilities**:
   - New `file-tag-utils.ts` utility module that handles both expansion and collapsing of file tags
   - `expandFileTags()` identifies `@path` tokens and replaces them with XML blocks containing file contents
   - `collapseXmlBlocks()` reverses the process, converting XML blocks back to `@path` format for UI display
   - Tokens are only expanded if they point to valid files (directories are ignored)
   - Expansion happens just before sending input to the model
2. **Terminal Chat Integration**:
   - Leveraged the existing file system completion system for tabbing to support the `@path` syntax
   - Added `updateFsSuggestions` helper to manage filesystem suggestions
   - Added `replaceFileSystemSuggestion` to replace input with filesystem suggestions
   - Applied `collapseXmlBlocks` in the chat response rendering so that tagged files are shown as simple `@path` tags

The PR also includes test coverage for both the UI and the file tag utilities.
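
The expand/collapse round trip can be sketched as follows. This is a Rust approximation, not a port of `file-tag-utils.ts`. Several details are assumptions made to keep the example small and testable:

- the exact XML wrapper format (`<path>`, newline, contents, newline, `</path>`)
- the split-on-spaces tokenization
- the injected `read_file` callback, which should return `None` for directories and missing paths

```rust
/// Replaces each `@path` token with an XML-style block holding the file's contents.
/// `read_file` returns `None` for anything that is not a readable file (e.g., a
/// directory), in which case the token is left untouched.
pub fn expand_file_tags(input: &str, read_file: impl Fn(&str) -> Option<String>) -> String {
    input
        .split(' ')
        .map(|token| {
            if let Some(path) = token.strip_prefix('@') {
                if let Some(contents) = read_file(path) {
                    return format!("<{path}>\n{contents}\n</{path}>");
                }
            }
            token.to_string()
        })
        .collect::<Vec<_>>()
        .join(" ")
}

/// Reverses `expand_file_tags`: each `<path>\n...\n</path>` block becomes `@path`.
pub fn collapse_xml_blocks(text: &str) -> String {
    let mut out = String::new();
    let mut rest = text;
    while let Some(start) = rest.find('<') {
        out.push_str(&rest[..start]);
        let after = &rest[start + 1..];
        // Candidate block: an opening tag terminated by ">\n" ...
        if let Some(gt) = after.find(">\n") {
            let path = &after[..gt];
            let close = format!("\n</{path}>");
            // ... followed later by the matching closing tag.
            if !path.is_empty() && !path.contains(char::is_whitespace) {
                if let Some(end) = after[gt..].find(&close) {
                    out.push('@');
                    out.push_str(path);
                    rest = &after[gt + end + close.len()..];
                    continue;
                }
            }
        }
        // Not a file block: keep the '<' literally and continue scanning.
        out.push('<');
        rest = after;
    }
    out.push_str(rest);
    out
}
```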

## Next Steps

Some ideas I'd like to implement if this feature gets merged:

- Line selection: `@path[50:80]` to grab specific sections of files
- Method selection: `@path#methodName` to grab just one function/class
- Visual improvements: highlight file tags in the UI to make them more noticeable

This pull request improves the error message displayed in the `AgentLoop` class when there is insufficient quota. The updated message provides more detailed information and a link for managing or purchasing credits.

Error message improvement:

* `codex-cli/src/utils/agent/agent-loop.ts`: Updated the error message in the `AgentLoop` class to include the specific error message (if available) and a link to manage or purchase credits.

Fixes #751

I suspect this was done originally so that `execForSandbox()` had a consistent signature for both the `SandboxType.NONE` and `SandboxType.MACOS_SEATBELT` cases, but that is not really necessary and turns out to make the upcoming Landlock support a bit more complicated to implement, so I had Codex remove it and clean up the call sites.

Apparently the URLs for draft releases cannot be downloaded using unauthenticated `curl`, which means the DotSlash file only works for users who are authenticated with `gh`. According to chat, prereleases _can_ be fetched with unauthenticated `curl`, so let's try that.

For now, keep things simple such that we never update the `version` in the `Cargo.toml` for the workspace root on the `main` branch. Instead, create a new branch for a release, push one commit that updates the `version`, and then tag that branch to kick off a release.

To test, I ran this script and created this release job: https://github.com/openai/codex/actions/runs/14762580641

The generated DotSlash file has URLs that refer to `https://github.com/openai/codex/releases/`, so let's set `prerelease:false` (but keep `draft:true` for now) so those URLs should work.

Also updated `version` in Cargo workspace so I will kick off a build once this lands.

I am working to simplify the build process. As a first step, update `session.ts` so it reads the `version` from `package.json` at runtime so we no longer have to modify it during the build process. I want to get to a place where the build looks like:

```bash
cd codex-cli
pnpm i
pnpm build
RELEASE_DIR=$(mktemp -d)
cp -r bin "$RELEASE_DIR/bin"
cp -r dist "$RELEASE_DIR/dist"
cp -r src "$RELEASE_DIR/src" # important if we want sourcemaps to continue to work
cp ../README.md "$RELEASE_DIR"
VERSION=$(printf '0.1.%d' $(date +%y%m%d%H%M))
jq --arg version "$VERSION" '.version = $version' package.json > "$RELEASE_DIR/package.json"
```

Then the contents of `$RELEASE_DIR` should be good to `npm publish`, no?

@oai-ragona and I discussed it, and we feel the REPL crate has served its purpose, so we're going to delete the code, and future archaeologists can find it in Git history.

Apparently I made two key mistakes in https://github.com/openai/codex/pull/740 (fixed in this PR):

* I forgot to redefine `$dest` in the `Stage Linux-only artifacts` step
* I did not define the `if` check correctly in the `Stage Linux-only artifacts` step

This fixes both of those issues and bumps the workspace version to `0.0.2504292006` in preparation for another release attempt.

This introduces a standalone executable that runs the equivalent of the `codex debug landlock` subcommand and updates `rust-release.yml` to include it in the release.

The idea is that we will include this small binary with the TypeScript CLI to provide support for Linux sandboxing.

Taking a pass at building artifacts per platform so we can consider different distribution strategies that don't require users to install the full `cargo` toolchain.

Right now this grabs just the `codex-repl` and `codex-tui` bins for 5 different targets and bundles them into a draft release. I think a clearly marked pre-release set of artifacts will unblock the next step of testing.

Previous to this PR, `SandboxPolicy` was a bit difficult to work with:

237f8a11e1/codex-rs/core/src/protocol.rs (L98-L108)

Specifically:

* It was an `enum` and therefore options were mutually exclusive as opposed to additive.
* It defined things in terms of what the agent _could not_ do as opposed to what it _could_ do. This made things hard to support because we would prefer to build up a sandbox config by starting with something extremely restrictive and only granting permissions for things the user has explicitly allowed.

This PR changes things substantially by redefining the policy in terms of two concepts:

* A `SandboxPermission` enum that defines permissions that can be granted to the agent/sandbox.
* A `SandboxPolicy` that internally stores a `Vec<SandboxPermission>`, but externally exposes a simpler API that can be used to configure Seatbelt/Landlock.
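
Here is a minimal sketch of the additive model. The variant names are illustrative assumptions, and the real `SandboxPermission` variants may differ. `has_full_disk_read_access()` is the query mentioned later in this description. `has_full_network_access()` and `writable_roots()` are hypothetical examples of the "simpler API" that a sandbox backend might consume:

```rust
use std::path::{Path, PathBuf};

/// Individual grants. A policy starts empty (maximally restrictive) and only adds these.
/// Variant names are illustrative assumptions.
#[derive(Debug, Clone, PartialEq, Eq)]
pub enum SandboxPermission {
    DiskFullReadAccess,
    DiskWriteCwd,
    DiskWriteFolder { folder: PathBuf },
    NetworkFullAccess,
}

/// Wraps the raw permission list and exposes simple queries for Seatbelt/Landlock setup.
#[derive(Debug, Clone, Default)]
pub struct SandboxPolicy {
    permissions: Vec<SandboxPermission>,
}

impl SandboxPolicy {
    pub fn new(permissions: Vec<SandboxPermission>) -> Self {
        Self { permissions }
    }

    pub fn has_full_disk_read_access(&self) -> bool {
        self.permissions.contains(&SandboxPermission::DiskFullReadAccess)
    }

    pub fn has_full_network_access(&self) -> bool {
        self.permissions.contains(&SandboxPermission::NetworkFullAccess)
    }

    /// Folders the sandboxed process may write to, resolving `DiskWriteCwd` against `cwd`.
    pub fn writable_roots(&self, cwd: &Path) -> Vec<PathBuf> {
        self.permissions
            .iter()
            .filter_map(|p| match p {
                SandboxPermission::DiskWriteCwd => Some(cwd.to_path_buf()),
                SandboxPermission::DiskWriteFolder { folder } => Some(folder.clone()),
                _ => None,
            })
            .collect()
    }
}
```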

Previous to this PR, we supported a `--sandbox` flag that effectively mapped to an enum value in `SandboxPolicy`. Now that `SandboxPolicy` is a wrapper around `Vec<SandboxPermission>`, however, the single `--sandbox` flag no longer makes sense. While I could have turned it into a flag that the user can specify multiple times, I think the current values to use with such a flag are long and potentially messy, so for the moment, I have dropped support for `--sandbox` altogether, and we can bring it back once we have figured out the naming thing.

Since `--sandbox` is gone, users now have to specify `--full-auto` to get a sandbox that allows writes in `cwd`. Admittedly, there is no clean way to specify the equivalent of `--full-auto` in your `config.toml` right now, so we will have to revisit that as well.

Because `Config` presents a `SandboxPolicy` field and `SandboxPolicy` changed considerably, I had to overhaul how config loading works as well. There are now two distinct concepts, `ConfigToml` and `Config`:

* `ConfigToml` is the deserialization of `~/.codex/config.toml`. As one might expect, every field is an `Option` and it is `#[derive(Deserialize, Default)]`. Consistent use of `Option` makes it clear what the user has specified explicitly.
* `Config` is the "normalized config" and is produced by merging `ConfigToml` with `ConfigOverrides`. Where `ConfigToml` contains a raw `Option<Vec<SandboxPermission>>`, `Config` presents only the final `SandboxPolicy`.
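
The merge step can be sketched as follows. The following are assumptions for illustration, not taken from the PR:

- the precedence rule (CLI override, then `config.toml`, then a built-in default)
- the use of an empty permission list as the default
- the `false` default for `disable_response_storage`

`SandboxPermission` and `SandboxPolicy` are trimmed stand-ins so that the block compiles on its own:

```rust
/// Trimmed stand-in for the permission enum.
#[derive(Debug, Clone, PartialEq, Eq)]
pub enum SandboxPermission {
    DiskFullReadAccess,
    DiskWriteCwd,
    NetworkFullAccess,
}

#[derive(Debug, Clone, PartialEq, Eq)]
pub struct SandboxPolicy(Vec<SandboxPermission>);

/// Raw view of `~/.codex/config.toml`: every field optional, so "unset" stays visible.
#[derive(Debug, Default)]
pub struct ConfigToml {
    pub sandbox_permissions: Option<Vec<SandboxPermission>>,
    pub disable_response_storage: Option<bool>,
}

/// Values that CLI flags may force, taking precedence over the file.
#[derive(Debug, Default)]
pub struct ConfigOverrides {
    pub sandbox_policy: Option<SandboxPolicy>,
    pub disable_response_storage: Option<bool>,
}

/// Normalized config: no `Option`s left, only final values.
#[derive(Debug)]
pub struct Config {
    pub sandbox_policy: SandboxPolicy,
    pub disable_response_storage: bool,
}

impl Config {
    pub fn from_toml_and_overrides(toml: ConfigToml, overrides: ConfigOverrides) -> Self {
        let sandbox_policy = overrides.sandbox_policy.unwrap_or_else(|| {
            // Assumed default: no permissions at all (most restrictive).
            SandboxPolicy(toml.sandbox_permissions.unwrap_or_default())
        });
        let disable_response_storage = overrides
            .disable_response_storage
            .or(toml.disable_response_storage)
            .unwrap_or(false);
        Self { sandbox_policy, disable_response_storage }
    }
}
```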

The changes to `core/src/exec.rs` and `core/src/linux.rs` merit extra special attention to ensure we are faithfully mapping the `SandboxPolicy` to the Seatbelt and Landlock configs, respectively.

Also, take note that `core/src/seatbelt_readonly_policy.sbpl` has been renamed to `codex-rs/core/src/seatbelt_base_policy.sbpl` and that `(allow file-read*)` has been removed from the `.sbpl` file as now this is added to the policy in `core/src/exec.rs` when `sandbox_policy.has_full_disk_read_access()` is `true`.
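
In other words, the read rule moves from the static base file into code. The sketch below shows that composition. It assumes the base policy is available as a string and that the final policy is built by appending lines. The function name and exact layout are hypothetical, and the two-line base policy in the test is only a stand-in:

```rust
/// Hypothetical: builds the final Seatbelt profile from the base `.sbpl` text.
pub fn build_seatbelt_policy(base_policy: &str, has_full_disk_read_access: bool) -> String {
    let mut policy = base_policy.trim_end().to_string();
    if has_full_disk_read_access {
        // Previously hard-coded in the .sbpl file; now granted only when the policy allows it.
        policy.push_str("\n(allow file-read*)");
    }
    policy.push('\n');
    policy
}
```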

The `saveConfig()` function only includes a hardcoded subset of properties when writing the config file. Any property not explicitly listed (like `disableResponseStorage`) will be dropped.

I have added `disableResponseStorage` to the `configToSave` object as the immediate fix.

[Linking Issue this fixes.](https://github.com/openai/codex/issues/726)

This PR adds a new CLI flag, `--reasoning`, which allows users to customize the reasoning effort level (`low`, `medium`, or `high`) used by OpenAI's `o` models.

By introducing the `--reasoning` flag, users gain more flexibility when working with the models. It enables optimization for either speed or depth of reasoning, depending on specific use cases.

This PR resolves #107

- **Flag**: `--reasoning`
- **Accepted Values**: `low`, `medium`, `high`
- **Default Behavior**: If not specified, the model uses the default reasoning level.
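
Validating the accepted values can be sketched with `FromStr`. This is an illustrative Rust sketch, since the CLI in question is TypeScript. The `ReasoningEffort` name and the error text are hypothetical. `None` stands for "flag not given", which leaves the model's default in place:

```rust
use std::str::FromStr;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ReasoningEffort {
    Low,
    Medium,
    High,
}

impl FromStr for ReasoningEffort {
    type Err = String;

    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "low" => Ok(Self::Low),
            "medium" => Ok(Self::Medium),
            "high" => Ok(Self::High),
            other => Err(format!(
                "invalid --reasoning value: {other} (expected low, medium, or high)"
            )),
        }
    }
}

/// `None` means the flag was omitted, so no effort level is sent and the model default applies.
pub fn parse_reasoning_flag(value: Option<&str>) -> Result<Option<ReasoningEffort>, String> {
    value.map(|v| v.parse::<ReasoningEffort>()).transpose()
}
```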

## Example Usage

```bash
codex --reasoning=low "Write a simple function to calculate factorial"
```

---------

Co-authored-by: Fouad Matin <169186268+fouad-openai@users.noreply.github.com>

Co-authored-by: yashrwealthy <yash.rastogi@wealthy.in>

Co-authored-by: Thibault Sottiaux <tibo@openai.com>

When processing an `apply_patch` tool call, we were already computing the new file content in order to compute the unified diff. Before this PR, we were shelling out to `patch(1)` to apply the unified diff once the user accepted the change, but this updates the code to just retain the new file content and use it to write the file when the user accepts. This simplifies deployment because it no longer assumes `patch(1)` is on the host.

Note this change is internal to the Codex agent and does not affect `protocol.rs`.
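
The idea of retaining the computed content, rather than re-applying a diff, can be sketched like this. `PendingUpdate` and its fields are hypothetical stand-ins for whatever the agent stores internally. Accepting the change becomes a plain file write, with no external `patch(1)` process:

```rust
use std::fs;
use std::io;
use std::path::PathBuf;

/// Hypothetical record kept while waiting for the user's approval.
pub struct PendingUpdate {
    pub path: PathBuf,
    /// Shown to the user for review.
    pub unified_diff: String,
    /// Already computed in order to produce `unified_diff`; reused on accept.
    pub new_content: String,
}

impl PendingUpdate {
    /// Writes the retained content directly instead of shelling out to `patch(1)`.
    pub fn accept(&self) -> io::Result<()> {
        fs::write(&self.path, &self.new_content)
    }
}
```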

This PR adds a `debug landlock` subcommand to the Codex CLI for testing how Codex would execute a command using the specified sandbox policy.

Built and ran this code in the `rust:latest` Docker container. In the container, hitting the network with vanilla `curl` succeeds:

```
$ curl google.com
<HTML><HEAD><meta http-equiv="content-type" content="text/html;charset=utf-8">
<TITLE>301 Moved</TITLE></HEAD><BODY>
<H1>301 Moved</H1>
The document has moved
<A HREF="http://www.google.com/">here</A>.
</BODY></HTML>
```

whereas this fails, as expected:

```
$ cargo run -- debug landlock -s network-restricted -- curl google.com
curl: (6) getaddrinfo() thread failed to start
```

https://github.com/openai/codex/pull/642 introduced support for the `--disable-response-storage` flag, but if you are a ZDR customer, it is tedious to set this every time, so this PR makes it possible to set this once in `config.toml` and be done with it.

Incidentally, this tidies things up such that now `init_codex()` takes only one parameter: `Config`.

Originally, the `interactive` crate was going to be a placeholder for building out a UX that was comparable to that of the existing TypeScript CLI. However, after researching how Ratatui works, that seems difficult to do because it is designed around the idea that it will redraw the full screen buffer each time (and so any scrolling should be "internal" to your Ratatui app), whereas the TypeScript CLI expects to render the full history of the conversation every time(*) (which is why you can use your terminal scrollbar to scroll it).

While it is possible to use Ratatui in a way that acts more like what the TypeScript CLI is doing, it is awkward and seemingly results in tedious code, so I think we should abandon that approach. As such, this PR deletes the `interactive/` folder and the code that depended on it.

Further, since we added support for mousewheel scrolling in the TUI in https://github.com/openai/codex/pull/641, it certainly feels much better and the need for scroll support via the terminal scrollbar is greatly diminished. This is now a more appropriate default UX for the "multitool" CLI.

(*) Incidentally, I haven't verified this, but I think this results in O(N^2) work in rendering, which seems potentially problematic for long conversations.
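
If that guess is right, the cost can be estimated as follows. This estimate assumes that every new message triggers a re-render of the whole history and that rendering cost grows linearly with the number of messages rendered. After $N$ messages, the total rendering work is proportional to

```math
\sum_{k=1}^{N} k = \frac{N(N+1)}{2} = O(N^2)
```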

* In both TypeScript and Rust, we now invoke `/usr/bin/sandbox-exec` explicitly rather than whatever `sandbox-exec` happens to be on the `PATH`.
* Changed `isSandboxExecAvailable` to use `access()` rather than `command -v` so that:
  * We only do the check once over the lifetime of the Codex process.
  * The check is specific to `/usr/bin/sandbox-exec`.
  * We now do a syscall rather than incur the overhead of spawning a process, dealing with timeouts, etc.
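
Here is a Rust sketch of the same check-once idea, plus a fallback to no sandbox (with a warning) when the binary is missing. It uses `std::sync::OnceLock` to cache the result. In place of `access(2)`, which the standard library does not expose directly, it uses `Path::is_file()`, a single metadata lookup. The function names and the `SandboxType` enum are hypothetical mirrors of the TypeScript ones:

```rust
use std::path::Path;
use std::sync::OnceLock;

const SANDBOX_EXEC: &str = "/usr/bin/sandbox-exec";

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum SandboxType {
    None,
    MacosSeatbelt,
}

/// Checks the absolute path only (never `PATH`), and only once per process.
pub fn is_sandbox_exec_available() -> bool {
    static AVAILABLE: OnceLock<bool> = OnceLock::new();
    // One metadata lookup; no child process or timeout handling needed.
    *AVAILABLE.get_or_init(|| Path::new(SANDBOX_EXEC).is_file())
}

/// Falls back to no sandbox (with a warning) when Seatbelt is unavailable.
pub fn choose_sandbox(wants_seatbelt: bool) -> SandboxType {
    if !wants_seatbelt {
        return SandboxType::None;
    }
    if is_sandbox_exec_available() {
        SandboxType::MacosSeatbelt
    } else {
        eprintln!("warning: {SANDBOX_EXEC} not found; running without a sandbox");
        SandboxType::None
    }
}
```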

I think there is still room for improvement here: we should move the `isSandboxExecAvailable` check earlier in the CLI, ideally right after we do arg parsing, to verify that we can provide the Seatbelt sandbox if that is what the user has requested.

Although we made some promising fixes in https://github.com/openai/codex/pull/662, we are still seeing some flakiness in `test_writable_root()`. If this continues to flake with the more generous timeout, we should try something other than simply increasing the timeout.

The existing `b` and `space` are sufficient, and `d` and `u` default to half-page scrolling in `less`, so the way we supported `d` and `u` wasn't faithful to that, anyway:

https://man7.org/linux/man-pages/man1/less.1.html

If we decide to bring `d` and `u` back, they should probably match `less`?

This changes how instantiating `Config` works and also adds `approval_policy` and `sandbox_policy` as fields. The idea is:

* All fields of `Config` have appropriate default values.
* `Config` is initially loaded from `~/.codex/config.toml`, so values in `config.toml` will override those defaults.
* Clients must instantiate `Config` via `Config::load_with_overrides(ConfigOverrides)` where `ConfigOverrides` has optional overrides that are expected to be settable based on CLI flags.

The `Config` should be defined early in the program and then passed down. Now functions like `init_codex()` take fewer individual parameters because they can just take a `Config`.

Also, `Config::load()` used to fail silently if `~/.codex/config.toml` had a parse error and fell back to the default config. This seemed really bad because it wasn't clear why the values in my `config.toml` weren't getting picked up. I changed things so that `load_with_overrides()` returns `Result<Config>` and verified that the various CLIs print a reasonable error if `config.toml` is malformed.
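
The behavioral change is that a parse error now propagates instead of being swallowed. The sketch below shows that shape. It is a hypothetical illustration: a tiny `key = "value"` parser stands in for real TOML parsing, and the two-field `Config` and its defaults are placeholders. It also assumes that a missing file (passed as `None`) simply means "use defaults", while a malformed file is an error the caller must handle:

```rust
/// Hypothetical, trimmed-down config with two string fields.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Config {
    pub model: String,
    pub approval_policy: String,
}

impl Default for Config {
    fn default() -> Self {
        // Placeholder defaults for the sketch, not Codex's real defaults.
        Self {
            model: "default-model".to_string(),
            approval_policy: "default-policy".to_string(),
        }
    }
}

/// Optional values that CLI flags may set.
#[derive(Debug, Default)]
pub struct ConfigOverrides {
    pub model: Option<String>,
    pub approval_policy: Option<String>,
}

/// `contents` is the text of `config.toml`, or `None` if the file does not exist.
/// Defaults < config file < overrides; a malformed file is an error, not a silent fallback.
pub fn load_with_overrides(
    contents: Option<&str>,
    overrides: ConfigOverrides,
) -> Result<Config, String> {
    let mut config = Config::default();
    for (index, raw_line) in contents.unwrap_or("").lines().enumerate() {
        let line = raw_line.trim();
        if line.is_empty() || line.starts_with('#') {
            continue;
        }
        // Toy `key = "value"` parsing stands in for a real TOML parser here.
        let (key, value) = line
            .split_once('=')
            .ok_or_else(|| format!("config.toml line {}: expected `key = \"value\"`", index + 1))?;
        let value = value.trim().trim_matches('"').to_string();
        match key.trim() {
            "model" => config.model = value,
            "approval_policy" => config.approval_policy = value,
            _ => {} // Unknown keys are ignored in this sketch.
        }
    }
    if let Some(model) = overrides.model {
        config.model = model;
    }
    if let Some(policy) = overrides.approval_policy {
        config.approval_policy = policy;
    }
    Ok(config)
}
```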

Finally, I also updated the TUI to show which **sandbox** value is being used, as we do for other key values like **model** and **approval**. This was also a reminder that the various values of `--sandbox` are honored on Linux but not macOS today, so I added some TODOs about fixing that.

- Introduce `isSandboxExecAvailable()` helper and tidy import ordering in `handle-exec-command.ts`.
- Add runtime check for the `sandbox-exec` binary on macOS; fall back to `SandboxType.NONE` with a warning if it’s missing, preventing crashes.

---------

Signed-off-by: Thibault Sottiaux <tibo@openai.com>

Co-authored-by: Fouad Matin <fouad@openai.com>

Adds support for reading `OPENAI_API_KEY` (and other variables) from a user-wide dotenv file (`~/.codex.config`). Precedence order is now:

1. explicit environment variable
2. project-local `.env` (loaded earlier)
3. `~/.codex.config`
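
The lookup order above amounts to taking the first source that defines the key. Here is a minimal sketch in which the three sources are modeled as plain maps. The function name and the map-based representation are assumptions, not how the dotenv loading is actually wired:

```rust
use std::collections::HashMap;

/// Returns the value from the highest-precedence source that defines `key`:
/// process environment, then project-local `.env`, then `~/.codex.config`.
pub fn resolve_var(
    key: &str,
    process_env: &HashMap<String, String>,
    project_dotenv: &HashMap<String, String>,
    user_config: &HashMap<String, String>,
) -> Option<String> {
    [process_env, project_dotenv, user_config]
        .iter()
        .find_map(|source| source.get(key).cloned())
}
```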

Also adds a regression test that ensures the multiline editor correctly handles cases where printable text and the CSI-u Shift+Enter sequence arrive in the same input chunk.

Ran Prettier for housekeeping and removed a stray `temp.json` artifact.

Addressing #600 and #664 (partially)

## Bug

Codex was staging duplicate items in its output when the same response item appeared in both streaming events. Specifically:

1. Items would be staged once when received as a `response.output_item.done` event
2. The same items would be staged again when included in the final `response.completed` payload

This duplication would result in each message being sent more than once in the quiet mode output.

## Changes

- Added a Set (`alreadyStagedItemIds`) to track items that have already been staged
- Modified the `stageItem` function to check if an item's ID is already in this set before staging it
- Added a regression test (`agent-dedupe-items.test.ts`) that verifies items with the same ID are only staged once
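
The dedupe logic is a standard seen-set check. Here is a Rust rendering of the idea. The `ItemStager` type and the `on_item` callback are hypothetical stand-ins for the TypeScript `stageItem`/`onItem` plumbing, and items are reduced to their IDs:

```rust
use std::collections::HashSet;

/// Hypothetical stager: forwards each item to `on_item` at most once per ID.
pub struct ItemStager<F: FnMut(&str)> {
    already_staged_item_ids: HashSet<String>,
    on_item: F,
}

impl<F: FnMut(&str)> ItemStager<F> {
    pub fn new(on_item: F) -> Self {
        Self { already_staged_item_ids: HashSet::new(), on_item }
    }

    /// Called for both `response.output_item.done` items and the items in the
    /// final `response.completed` payload; repeats are dropped.
    pub fn stage_item(&mut self, id: &str) {
        // `insert` returns false if the ID was already present.
        if self.already_staged_item_ids.insert(id.to_string()) {
            (self.on_item)(id);
        }
    }
}
```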

## Testing

Like other tests, the included test creates a mock OpenAI stream that emits the same message twice (once as an incremental event and once in the final response) and verifies the item is only passed to `onItem` once.

Previously, the Rust TUI was writing log files to `/tmp`, which is world-readable and not available on Windows, so that isn't great.

This PR tries to clean things up by adding a function that provides the path to the "Codex config dir," e.g., `~/.codex` (though I suppose we could support `$CODEX_HOME` to override this?), and then defines other paths in terms of the result of `codex_dir()`.

For example, `log_dir()` returns the folder where log files should be written, which is defined in terms of `codex_dir()`. I updated the TUI to use this function. On UNIX, we even go so far as to `chmod 600` the log file by default, though as noted in a comment, it's a bit tedious to do the equivalent on Windows, so we just let that go for now.
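
Here is a sketch of that layering, with two deviations to keep it self-contained and testable. First, the home directory is passed in rather than looked up. Second, the `log` subfolder and `codex-tui.log` file names are hypothetical. On Unix, `OpenOptionsExt::mode(0o600)` applies owner-only permissions when the file is created. Those permissions are subject to the umask and only affect newly created files. Other platforms get default permissions:

```rust
use std::fs::{self, File, OpenOptions};
use std::io;
use std::path::{Path, PathBuf};

/// The "Codex config dir", e.g. `~/.codex`.
pub fn codex_dir(home: &Path) -> PathBuf {
    home.join(".codex")
}

/// Log files live under the config dir rather than world-readable `/tmp`.
pub fn log_dir(home: &Path) -> PathBuf {
    codex_dir(home).join("log")
}

/// Opens (creating if needed) the TUI log file for appending.
pub fn open_log_file(home: &Path) -> io::Result<File> {
    let dir = log_dir(home);
    fs::create_dir_all(&dir)?;
    let mut options = OpenOptions::new();
    options.create(true).append(true);
    #[cfg(unix)]
    {
        use std::os::unix::fs::OpenOptionsExt;
        // Owner read/write only (chmod 600) for newly created files.
        options.mode(0o600);
    }
    options.open(dir.join("codex-tui.log"))
}
```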

This also changes the default logging level to `info` for `codex_core` and `codex_tui` when `RUST_LOG` is not specified. I'm not really sure if we should use a more verbose default (it may be helpful when debugging user issues), though if so, we should probably also set up log rotation?